4. GNATfuzz User’s Guide¶

4.1. Introduction¶

GNATfuzz provides fuzz-testing facilities for Ada projects. The main features it provides are:

Identifying subprograms that are suitable for automated fuzz-testing (referred to as automatically fuzzable subprograms).

Generating fuzz-test harnesses that receive binary encoded data, invoke the subprogram under test, and report run-time anomalies.

Generating fuzz-test initial test cases.

Building and executing fuzz-testing campaigns controlled by user-defined stopping criteria.

Real-time test coverage analysis through integration with GNATcoverage.

These features are available both via the command line and through integration with the GNAT Studio IDE.

The fuzzing engine in GNATfuzz is based on AFL++, a popular open-source and highly configurable fuzzer, and an instrumentation plugin shipped as part of the GNAT Pro toolchain.

The primary goal of GNATfuzz is to abstract away many of the technical details needed to set up, run, and understand the outcome of a fuzzing session on an application.

4.1.1. Fuzz testing in a nutshell¶

Note

In the context of GNATfuzz, we refer to the project or program that you’re targeting with fuzz testing as the “system under test”.

Fuzzing (or fuzz testing) in the context of software is an automated testing technique that, based on some initial input test cases (the corpus) for a program, will automatically and repeatedly run tests and generate new test cases at a very high frequency to detect faulty behavior of the system under test. Such erroneous behavior is captured by monitoring the system for triggered exceptions, failing built-in assertions, and signals such as SIGSEGV (which shouldn’t occur in Ada programs unless they’re erroneous).

Fuzz testing is now widely associated with cybersecurity. It has proven to be an effective mechanism for finding corner-case code vulnerabilities that traditional human-driven verification mechanisms, such as unit and integration testing, can miss. Such code vulnerabilities can often lead to malicious exploitations.

Fuzz testing comes in different flavors. Black-box fuzz testing refers to the approach of randomly generating test cases without taking into account any information about the internal structure, functional behavior, or execution of the system under test. AFL++’s native execution mode is considered a grey box fuzzer because it obtains code coverage by using code instrumentation information. This information helps the mutation and generation phase of the fuzzer generate test cases that have a higher probability of increasing code coverage than randomly generated test cases would. This instrumentation is added automatically by GNATfuzz during the fuzz mode, explained in detail later in this guide.

4.1.2. AFL++ basics¶

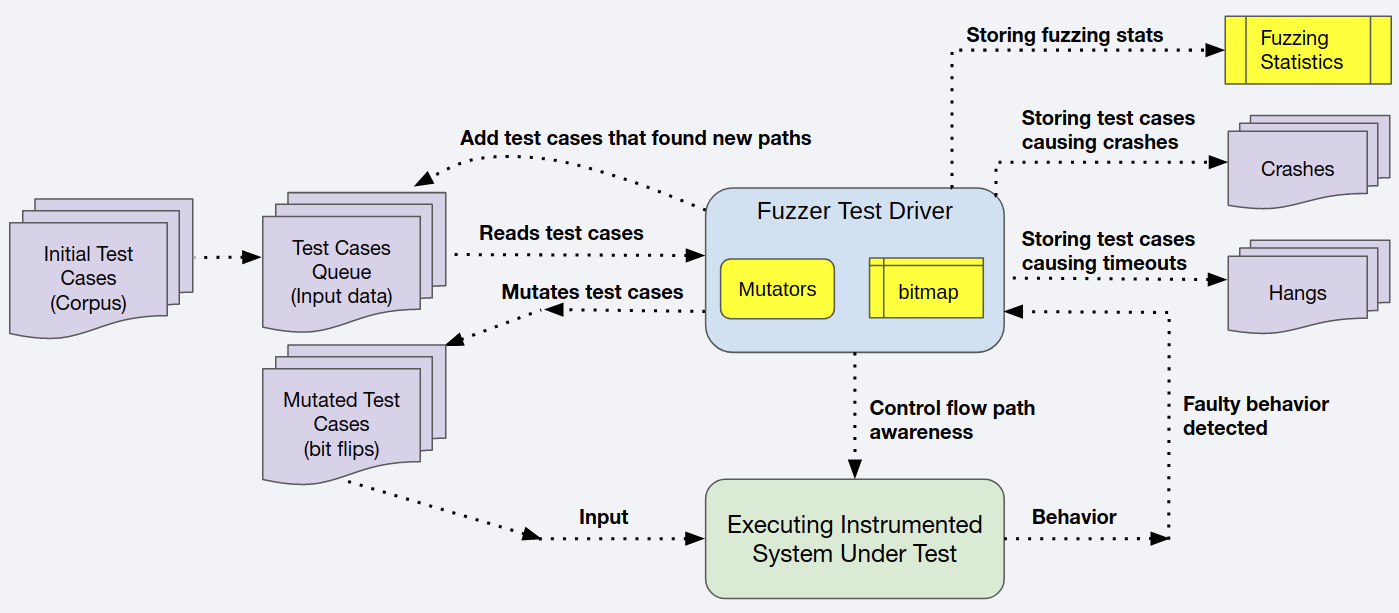

This section contains a very high-level overview of AFL++, a highly configurable, instrumentation-guided genetic fuzzer. A GCC plugin, enabled via the “afl-gcc-fast” compiler driver directive, generates efficient code instrumentation that enables AFL++ to process GCC-compiled code. Once the system under test is compiled with the AFL++ GCC plugin, you can use the “afl-fuzz” fuzzing driver to run a fuzzing session. The different basic elements and phases involved in an AFL++ fuzzing session are described in Fig. 4.1.

Note

This document is not intended to be a substitute for the AFL++ documentation or that of its GCC plugin extensions, but rather to provide some information needed to understand AFL++ and how it works. In this document, we mostly focus on the added value of the GNATfuzz toolchain. For more information about AFL++, you can visit the AFL++ official site.

Fig. 4.1 Overview of an AFL++ fuzz testing session¶

Prior to starting a fuzzing session, you must provide some initial test cases, called the corpus, each in a separate file. Starting a fuzzing session moves the corpus to what’s called the test cases queue, which isn’t really a queue, but instead a directory containing a list of files, each representing a test case. AFL++ adds tests to the queue, but never removes any from it.

The details are complex, but in general, for each test case in the queue, AFL++ uses one or more internal or external mutators to create multiple new test cases (it can create between hundreds and tens of thousands of new tests from each test in the queue), each of which it executes on the system under test. It monitors each execution for any faulty behavior, as described above, and moves any test cases that cause such behavior to a directory called crashes. AFL++ provides a configuration parameter that sets a timeout for each test. If a test exceeds the timeout, it’s killed and stored in the session’s hangs directory. Saving these tests allows you to replay and investigate the problematic test cases.

While running each test, AFL++ collects all the instrumentation data and updates its bitmap, an array global to the session where each index represents an instrumentation point added to the system under test during its compilation by the GCC AFL++ plugin. Whenever an instrumentation point is executed, its corresponding index in the bitmap is incremented. The bitmap enables the fuzzing session to be path-coverage aware.
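The interplay between the queue, the mutators, the bitmap, and the crashes directory can be pictured with a toy model. The following is a minimal sketch, not AFL++ code: the target function, the exhaustive byte mutator, and the use of a set of hit indexes instead of hit counters are all simplifying assumptions made for illustration.

```python
"""Toy coverage-guided fuzzing loop (illustrative only; not AFL++ code)."""

BITMAP_SIZE = 4  # one slot per instrumentation point in the toy target


def toy_target(data, bitmap):
    """Hypothetical system under test with four instrumentation points."""
    bitmap[0] += 1
    if len(data) > 0 and data[0] == ord("A"):
        bitmap[1] += 1
        if len(data) > 1 and data[1] == ord("d"):
            bitmap[2] += 1
            if len(data) > 2 and data[2] == ord("a"):
                bitmap[3] += 1
                raise RuntimeError("simulated crash")


def mutations(case):
    """Deterministic stand-in for a mutator: every byte value at every offset."""
    for offset in range(len(case)):
        for value in range(256):
            yield case[:offset] + bytes([value]) + case[offset + 1:]


def run(case):
    """Execute one test; return (crashed, set of bitmap indexes that were hit)."""
    bitmap = [0] * BITMAP_SIZE
    crashed = False
    try:
        toy_target(case, bitmap)
    except RuntimeError:
        crashed = True
    return crashed, {i for i, count in enumerate(bitmap) if count}


def fuzz(corpus):
    queue = list(corpus)  # entries are added, never removed
    crashes = []
    seen = set()          # indexes hit by any test so far
    for case in queue:    # the seeds establish the baseline coverage
        seen |= run(case)[1]
    position = 0
    while position < len(queue):
        for candidate in mutations(queue[position]):
            crashed, hit = run(candidate)
            if crashed:
                if candidate not in crashes:
                    crashes.append(candidate)
            elif not hit <= seen:  # reached something new: keep for mutation
                queue.append(candidate)
            seen |= hit
        position += 1
    return queue, crashes


queue, crashes = fuzz([b"xxx"])
print(queue, crashes)
```

Starting from a single uninformative seed, each newly covered branch earns its test case a place in the queue, which is what lets the loop reach the nested crash one byte at a time.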

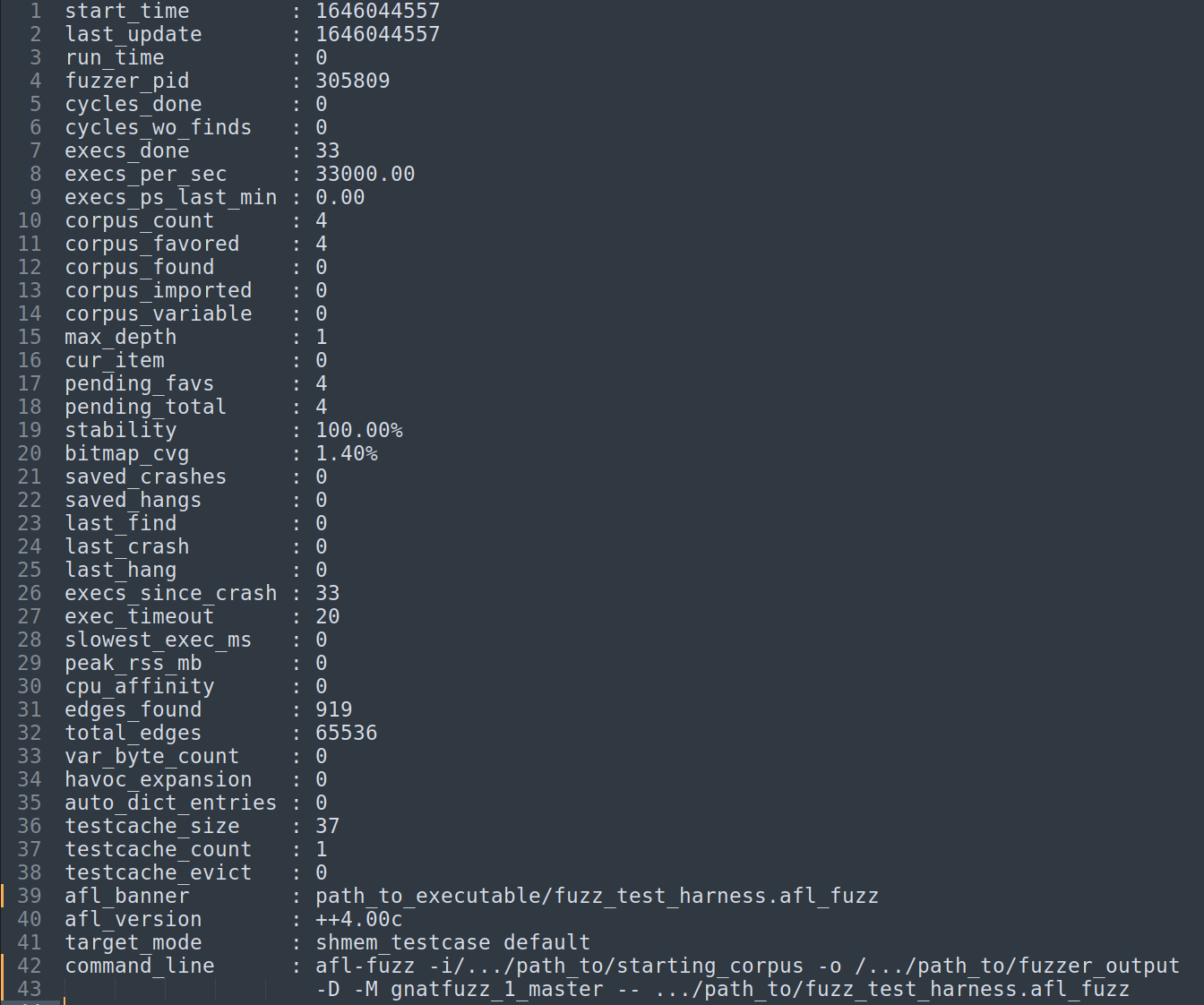

If a mutated test case executes a new path, it’s considered a good candidate to further increase the coverage when mutated and is added to the queue so AFL++ will generate further mutations of that test. The fuzzing session cycle counter is increased when all the test cases in the queue have been through the fuzzing process at least once. The cycle counter is part of the fuzzing statistics that GNATfuzz produces and stores during a session. An example file containing these statistics is shown below:

Fig. 4.2 Overview of an AFL++ fuzzer_stats file (produced by afl-fuzz++3.00a)¶

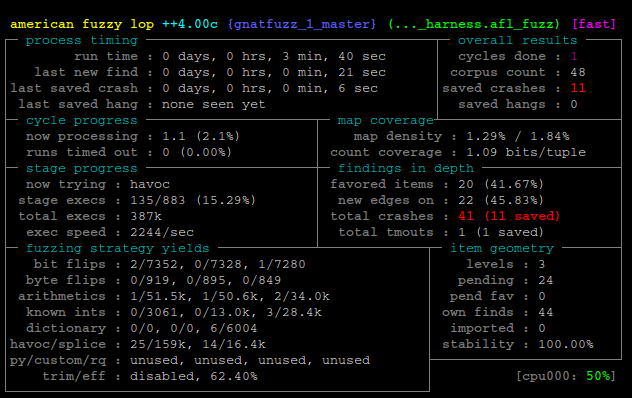

Some of these statistics are also included in the fuzzing session information terminal (which can be optionally shown during the execution of GNATfuzz):

Fig. 4.3 Fuzzing session information terminal example. (produced by afl-fuzz++3.00a)¶

Some of the most important statistics are:

start_time - start time of afl-fuzz (as a unix time)

last_update - last update of this file (as a unix time)

fuzzer_pid - PID of the fuzzer process

cycles_done - queue cycles completed so far

execs_done - number of execution calls to the system under test

execs_per_sec - current number of executions per second

corpus_count - total number of entries in the queue

corpus_found - number of entries discovered through local fuzzing

corpus_imported - number of entries imported from other instances

max_depth - number of levels in the generated data set

cur_item - entry number currently being processed

pending_favs - number of favored entries still waiting to be fuzzed

pending_total - number of all entries waiting to be fuzzed

stability - percentage of bitmap bytes that behave consistently

corpus_variable - number of test cases showing variable behavior

unique_crashes - number of unique crashes recorded

unique_hangs - number of unique hangs encountered

The complete list of all the statistics generated by AFL++ and the description of each can be found in the AFL++ documentation. These statistics are meant to assist you in making informed decisions on when to stop a fuzzing session. Typically, based on the statistics, you can identify when a fuzzing session has plateaued, meaning that GNATfuzz has no further potential to explore new execution paths in the system under test.
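As an illustration of how these statistics might be consumed by a script, here is a small sketch that parses a stats file and applies a plateau heuristic. It assumes the file uses one `name : value` pair per line; the sample values are invented, and the heuristic (a minimum number of completed cycles with no favored entries pending) is a hypothetical rule of thumb, not a GNATfuzz stopping criterion.

```python
def parse_fuzzer_stats(text):
    """Parse 'name : value' lines into a dict of strings."""
    stats = {}
    for line in text.splitlines():
        key, separator, value = line.partition(":")
        if separator:  # skip lines without a colon
            stats[key.strip()] = value.strip()
    return stats


def looks_plateaued(stats, min_cycles=5):
    """Hypothetical heuristic: enough full cycles and no favored entries left."""
    cycles = int(stats.get("cycles_done", 0))
    pending_favs = int(stats.get("pending_favs", 0))
    return cycles >= min_cycles and pending_favs == 0


# Invented sample content, using statistic names from the list above.
SAMPLE = """\
start_time        : 1700000000
cycles_done       : 7
execs_done        : 1234567
corpus_count      : 42
pending_favs      : 0
pending_total     : 3
stability         : 100.00%
"""

stats = parse_fuzzer_stats(SAMPLE)
print(stats["corpus_count"], looks_plateaued(stats))
```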

Setting up, running, and stopping a fuzzing session can be challenging. You must build and execute a coverage campaign that allows you to understand the impact of fuzz-testing on your project. There are many steps to this. First, you must construct an adequate starting corpus. The quality of the starting corpus can significantly affect the fuzzing session’s results. The more meaningful the starting corpus is as an input to the system under test, the more coverage GNATfuzz can achieve. After providing the corpus, you need to wrap the system under test in a suitable test harness. Next, you have to build the wrapped system with the GCC AFL++ plugin to add instrumentation to the code. Then you need to call the command that starts the fuzzing session with all the required configuration flags and actively monitor it to decide if it has performed enough fuzzing.

AFL++ fuzzing is primarily used to fuzz programs that accept files as inputs. This makes it more challenging to use AFL++ as a form of unit-level vulnerability testing. The current built-in mutators will mutate test cases at the binary level and are unaware of any structure within the test cases. This can be problematic when, for example, it mutates static data that should not be changed, such as an array’s upper and lower bounds - their mutation can result in corruption of the test-case data resulting in a wasted mutation cycle.
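To make the array-bounds problem concrete, the sketch below encodes an array together with its bounds and then flips a single bit, as a structure-unaware mutator might. The binary layout (two little-endian 32-bit bounds followed by one byte per element) is a hypothetical encoding chosen for the example; it is not the format GNATfuzz uses.

```python
import struct


def encode_array(first, last, elements):
    """Hypothetical layout: two 32-bit bounds, then one byte per element."""
    return struct.pack("<ii", first, last) + bytes(elements)


def decode_array(blob):
    """Decode the layout above, rejecting bounds that disagree with the payload."""
    first, last = struct.unpack_from("<ii", blob, 0)
    payload = blob[8:]
    expected = max(last - first + 1, 0)
    if expected != len(payload):
        raise ValueError(
            f"bounds {first}..{last} need {expected} elements, got {len(payload)}"
        )
    return first, last, list(payload)


def flip_bit(blob, byte_index, bit):
    """Structure-unaware mutation: flip one bit somewhere in the test case."""
    mutated = bytearray(blob)
    mutated[byte_index] ^= 1 << bit
    return bytes(mutated)


seed = encode_array(1, 4, [10, 20, 30, 40])
mutated = flip_bit(seed, 4, 3)  # lands in the upper bound: 4 becomes 12

print(decode_array(seed))
try:
    decode_array(mutated)
except ValueError as error:
    print("wasted mutation:", error)
```

A mutation that lands in the element bytes yields another valid test case, whereas one that lands in the bounds produces data the harness can only reject.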

4.1.3. GNATfuzz overview¶

GNATfuzz utilizes several AdaCore technologies to provide ease of use and enhanced fuzzing capability for Ada and SPARK programs. Fuzzing Ada and SPARK code has a significant advantage over fuzzing other traditional, less memory-safe languages, like C. Ada’s extended runtime constraint checks can capture faulty behavior, such as overflows, which often go undetected in C. This provides a significant advantage because the software assurance and security confidence that can be achieved when fuzzing Ada and SPARK programs is higher than when fuzzing C programs.

In a nutshell, GNATfuzz abstracts away the complexity of building, executing, and stopping fuzzing campaigns and enables unit-level fuzz-testing for Ada and SPARK programs by:

Automating the detection of fuzzable subprograms.

Automating the creation of the test-harness for a fuzzable subprogram.

Automating the creation and minimization of the starting corpus.

Allowing for simple specification of criteria for stopping the session and monitoring how those criteria are being met during the session.

Leveraging the GNATcoverage tool to provide coverage information during or after a fuzzing session.

Supporting multicore fuzzing.

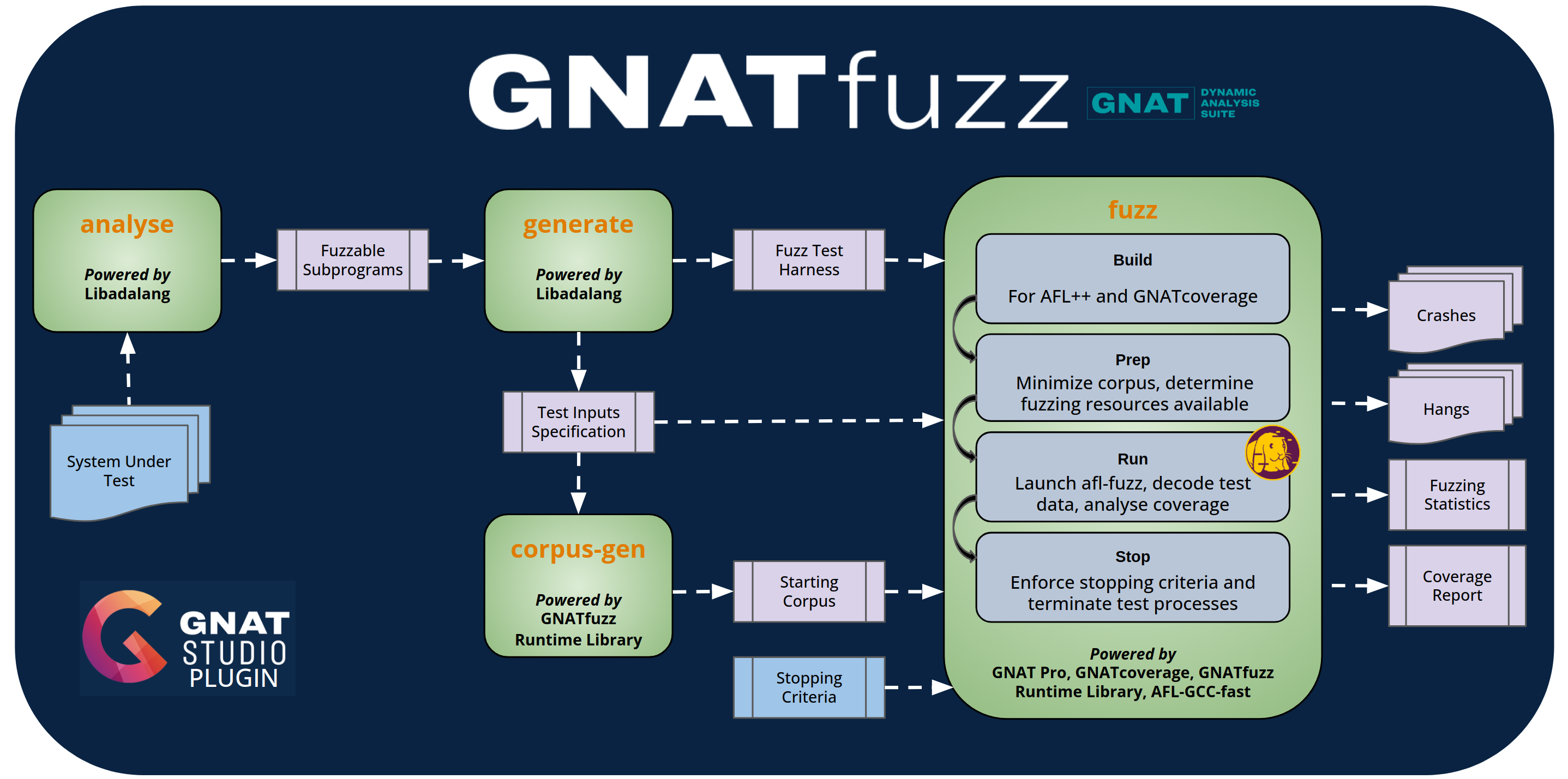

Fig. 4.4 GNATfuzz overview¶

Fig. 4.4 gives an overview of the GNATfuzz toolchain. GNATfuzz offers several modes which you can use to largely automate the manual activities needed for setting up and running a fuzz-test campaign. The green boxes in Fig. 4.4 represent the four main operating modes of GNATfuzz: analyze, generate, corpus-gen, and fuzz.

The gnatfuzz command line interface provides complete access to these features and the IDE integration in GNAT Studio provides a full UI workflow to drive the command line interface.

We start by giving a very high-level description of the GNATfuzz workflow and then provide details about the information footprints of each GNATfuzz mode and how each works in later sections.

The workflow shown in Fig. 4.4 is intended for unit-level fuzz testing. In this scenario, you first identify fuzzable subprograms within the system under test. You do this by specifying the project file of the system under test as an argument to the analyze mode of GNATfuzz. This mode scans the project for all fuzzable subprograms and lists them in a JSON file, including the source file and line information for each. Subprograms considered to be fuzzable are those for which the GNATfuzz generate mode can automatically generate a starting corpus. See Section 4.3.2 for the auto-generation capabilities of the current version of GNATfuzz.

You then select one of these fuzzable subprograms and specify it as an argument to the generate mode of GNATfuzz. This mode creates a test harness for that subprogram, including all the execution scripts needed to run the fuzzing campaign and the input-data specifications for the subprogram. Based on these input-data specifications, you can automatically generate the starting corpus using the corpus-gen mode. After you run that mode, all the prerequisites needed to execute a fuzz-testing session are in place. The two last steps you might optionally want to perform are creating stopping criteria for the fuzzing campaign and manually providing some additional test cases for the starting corpus.

You can then call the GNATfuzz fuzz mode. This builds the system under test with AFL++ code instrumentation, using the afl-gcc-fast compiler plugin. The system under test is also built with support for GNATcoverage; see the GNATcoverage User’s Guide for more information about the GNATcoverage tool.

After the system is built, you use the fuzz mode to execute one or more AFL++ fuzzing sessions. By default, GNATfuzz handles the multicore aspects of the fuzz testing campaign, such as allocating parallel fuzzing sessions of the system under test to available cores. It also periodically synchronizes the test-case queues and coverage reporting and monitors the stopping criteria between all the parallel fuzzing sessions. For details of the internal processes of a fuzz-testing session, see Fig. 4.1.

Finally, all fuzzing activities stop when the stopping criteria (either specified by you or by default) are met, at which point the final results of the fuzzer are ready for your inspection, including the set of crashing test cases, the set of test cases causing the system to time out (the hangs), the coverage report, and the AFL++ fuzzing statistics.
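The four-step workflow described above can be sketched as a list of command lines. This is only an illustration: it uses the switches documented in Section 4.2, the directory names are placeholders, and the location of the generated harness project (fuzz_testing/fuzz_test.gpr under the output directory) follows the example in Section 4.3.3. The sketch builds the argument lists without running anything.

```python
import shlex


def workflow_commands(project, analysis_json, subprogram_id,
                      harness_dir="generated_test_harness",
                      corpus_dir="starting_corpus", cores=2):
    """Return the analyze, generate, corpus-gen, and fuzz invocations in order."""
    # Assumed from the example in Section 4.3.3: where generate puts the project.
    harness_gpr = f"{harness_dir}/fuzz_testing/fuzz_test.gpr"
    return [
        ["gnatfuzz", "analyze", "-P", project],
        ["gnatfuzz", "generate", "-P", project,
         "--analysis", analysis_json,
         "--subprogram-id", str(subprogram_id),
         "-o", harness_dir],
        ["gnatfuzz", "corpus-gen", "-P", harness_gpr, "-o", corpus_dir],
        ["gnatfuzz", "fuzz", "-P", harness_gpr,
         "--corpus-path", corpus_dir, "--cores", str(cores)],
    ]


commands = workflow_commands("simple.gpr", "obj/gnatfuzz/analyze.json", 1)
for command in commands:
    print("$", shlex.join(command))
```

Each list could be handed to `subprocess.run(command, check=True)` in turn; keeping the commands as data makes it easy to log or review them first.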

In the following sections, we first provide detailed information on the various GNATfuzz modes, including their configuration, and then provide examples, first using the GNATfuzz command-line interface and then using the GNAT Studio IDE GNATfuzz interface, to illustrate these modes.

4.2. GNATfuzz command line interface¶

This section describes the various possible parameters for each GNATfuzz mode. To see the detailed effect of each switch, the artifacts it can generate, and how these artifacts can be fed into the next mode of the GNATfuzz toolchain, see Section 4.3, GNATfuzz by example.

Note

For this documentation, the ‘$’ sign denotes the system prompt.

4.2.1. GNATfuzz “analyze” mode command line interface¶

In this mode GNATfuzz has the following command line interface:

$ gnatfuzz analyze [--help|-h] [-P <PROJECT>] [-S <SPEC-FILE>] [-X <NAME=VALUE>] [-l] [-v]

where

--help, -h

Shows the usage information for the analyze mode.

-P <PROJECT>

Specifies the project file to be analyzed. Mandatory.

-S <SPEC-FILE>

Targets the analysis at an Ada specification file within the given project.

-X <NAME=VALUE>

Sets the external variable NAME in the system-under-test’s GPR project to VALUE.

4.2.2. GNATfuzz “generate” mode command line interface¶

In this mode GNATfuzz has the following command line interface:

$ gnatfuzz generate [--help|-h] [-P <PROJECT>] [-S <SOURCE-FILE>] [-L <LINE-NUMBER>]
                    [--analysis <ANALYZE-JSON>] [--subprogram-id <SUBPROGRAM-ID>]
                    [-o <OUTPUT-DIRECTORY>] [-X <NAME=VALUE>]

where

--help, -h

Shows the usage information for the generate mode.

-P <PROJECT>

Specifies the project file to be analyzed. Mandatory.

-S <SOURCE-FILE>

Specifies the source file of the subprogram under test. Mandatory. Requires: -L. Mutually exclusive with: --analysis, --subprogram-id.

-L

Line number of the subprogram under test (indexed at 1). Mandatory. Requires: -S. Mutually exclusive with: --analysis, --subprogram-id.

--analysis

Path to the analysis JSON file generated by the analyze mode. Mandatory. Requires: --subprogram-id. Mutually exclusive with: -S, -L.

--subprogram-id

Id of the target fuzzable subprogram found in the analysis file. Mandatory. Requires: --analysis. Mutually exclusive with: -S, -L.

-o

Path where the generated files are created. Mandatory.

-X <NAME=VALUE>

Sets the external variable NAME in the system-under-test’s GPR project to the value VALUE.

4.2.3. GNATfuzz “corpus-gen” mode command line interface¶

In this mode GNATfuzz has the following command line interface:

$ gnatfuzz corpus-gen [--help|-h] [-P <PROJECT>] [-o <output-directory>]
                      [-X <NAME=VALUE>] [-l] [-v]

where

--help, -h

Shows the usage information for the corpus-gen mode.

-P <PROJECT>

Specifies the fuzz_test.gpr project file that we want to generate a corpus for. Mandatory.

-o

Path where the generated files are created. Mandatory.

-X <NAME=VALUE>

Sets the external variable NAME in the system-under-test’s GPR project to VALUE.

4.2.4. GNATfuzz “fuzz” mode command line interface¶

In this mode GNATfuzz has the following command line interface:

$ gnatfuzz fuzz [--help|-h] [-P <PROJECT>] [-X <NAME=VALUE>] [--sut-flags SUT-FLAGS]
                [--cores CORES] [--corpus-path CORPUS-PATH] [--no-cmin]
                [--input-mode INPUT-MODE] [--seed SEED] [--afl-mode AFL-MODE]
                [--no-deterministic-phase] [--no-GNATcov]
                [--stop-criteria STOP-CRITERIA] [--ignore-stop-criteria]
                [-l] [-v]

where

--help, -h

Shows the usage information for the fuzz mode.

-P <PROJECT>

Specifies the project file to be analyzed. Mandatory.

-X <NAME=VALUE>

Sets the external variable NAME in the system-under-test’s GPR project to VALUE.

--sut-flags

Passes application flags to the system under test.

--cores

The number of cores the fuzzing campaign can utilize. If 0 (also the default if no value is passed), the campaign will utilize the maximum number of available cores. The provided value must be in the range of 0 to 255.

--corpus-path

Path to the starting corpus to be used.

--no-cmin

Do not run afl-cmin; afl-cmin reduces the starting corpus down to a set of test cases that all find unique paths through the control flow of the system under test.

--input-mode

Specifies how the test cases will be injected into the test harness. Possible alternatives: stdin, cmdline_param. Default: stdin.

--seed

Use a fixed seed for AFL random number generators. This is mainly used for deterministic benchmarking. The provided value must be in the range of 0 to the maximum file size of the starting corpus test case files (in bytes).

--afl-mode

Specifies the AFL_Mode to run. Possible alternatives: afl_defer_and_persist, afl_persist, afl_defer, afl_plain. Default: afl_plain.

--no-deterministic-phase

Do not run the deterministic (sequential bit flip) mutation phase.

--no-GNATcov

Do not run the (near) real-time dynamic coverage analysis using GNATcoverage (statement).

--stop-criteria

Override the default stop criteria rules with the provided rules.

--ignore-stop-criteria

Ignore stop criteria rules (the fuzzer must be stopped manually).

4.3. GNATfuzz by example¶

This section provides several examples to demonstrate the use of the GNATfuzz tool. These examples incrementally increase in complexity and represent the main capabilities of GNATfuzz. You can run these cases on your machine. All examples are located under:

<install_prefix>/share/examples/

4.3.1. Prerequisites and possible configurations¶

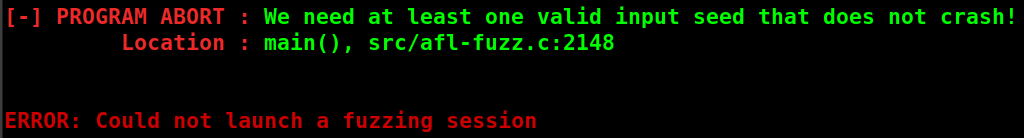

Before using the technology, you need to be aware of some AFL++ and GNATfuzz requirements and configurations, which are discussed below.

4.3.1.1. AFL++ requirements¶

To allow the AFL++ tool, and therefore GNATfuzz, to run successfully, you need to execute the following commands as root (either by logging in as root or running su or sudo, as appropriate for your system) before running any fuzzing sessions:

$ echo core >/proc/sys/kernel/core_pattern

$ cd /sys/devices/system/cpu

$ echo performance | tee cpu*/cpufreq/scaling_governor

The first command avoids having crashes misinterpreted as timeouts and the second is required to improve the performance of a fuzz-testing session. For more information, see the relevant AFL++ implementation details.
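If you want to verify that these settings are in place before launching a campaign, a small check along the following lines may help. It is a sketch that simply mirrors the commands above (it expects the core pattern to be exactly `core` and every governor to be `performance`), which may be stricter than what AFL++ itself requires; the function names are illustrative.

```python
import glob

CORE_PATTERN = "/proc/sys/kernel/core_pattern"
GOVERNORS = "/sys/devices/system/cpu/cpu*/cpufreq/scaling_governor"


def find_problems(core_pattern, governors):
    """Compare the given settings with the values set by the commands above."""
    problems = []
    if core_pattern.strip() != "core":
        problems.append(
            f"core_pattern is {core_pattern.strip()!r}, expected 'core'"
        )
    for path, governor in sorted(governors.items()):
        if governor.strip() != "performance":
            problems.append(
                f"{path}: governor is {governor.strip()!r}, expected 'performance'"
            )
    return problems


def read_system_settings():
    """Read the live values; only meaningful on a Linux host."""
    with open(CORE_PATTERN) as handle:
        core_pattern = handle.read()
    governors = {}
    for path in glob.glob(GOVERNORS):
        with open(path) as handle:
            governors[path] = handle.read()
    return core_pattern, governors
```

On the fuzzing host, `find_problems(*read_system_settings())` returns an empty list when both settings match.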

4.3.1.2. GNATfuzz requirements¶

The current requirements for GNATfuzz are:

GNATfuzz is currently only supported by GNAT Pro Ada x86 64-bit Linux distributions.

GNATfuzz requires that Ada projects be built using GPR project files and the GPRbuild compilation tool.

Warning

GNATfuzz has no specific hardware requirements but is a highly resource-intensive application. Executing a fuzz-testing session could run thousands of simultaneous tests, which can occupy the majority of the resources of a computing system. Ensure you allocate the number of cores you feel comfortable with to a fuzz-testing session (see Section 4.2.4).

4.3.1.3. GNATfuzz configurations¶

GNATFUZZ_XTERM: by default, the display of the AFL++ terminals (see Fig. 4.3) is disabled during a GNATfuzz testing session. To enable their display, set this environment variable.

4.3.1.4. GNATfuzz and the AFL++ execution modes¶

AFL++ provides four fuzz-testing execution modes: plain, persistent, defer, and defer-and-persistent. You can choose one of these via the command line switch --afl-mode when running GNATfuzz in fuzz mode. If you don’t specify this switch, GNATfuzz uses the persistent mode.

Different AFL++ execution modes cause the GNATfuzz fuzz-testing session to behave in different ways. These behaviors are explained in the following sections.

4.3.1.4.1. GNATfuzz and the AFL++ plain mode¶

When GNATfuzz runs in AFL++ plain mode, AFL++ forks a new process for the execution of each test. Doing so guarantees that all application memory is reset between tests, but AFL++ plain mode has a high overhead because forking new processes is resource-intensive.

4.3.1.4.2. GNATfuzz and the AFL++ persistent mode¶

When GNATfuzz runs in AFL++ persistent mode, AFL++ executes 10,000 tests sequentially within the same process before it spawns a new process to run the next set of tests. Avoiding the cost of forking a new process for each test makes the persistent mode very efficient: the number of tests executed each second can easily be 10 to 20 times higher than in AFL++ plain mode.

However, while the state of the application itself is reset between each new process, including any statically allocated state or state that is created during program elaboration, any state in dynamically allocated memory is retained between test executions within the same process.

Therefore, persistent mode requires you to completely reset dynamically allocated memory. This ensures that resource leaks are avoided, the application state is not impacted by a previous execution, and each test execution is reproducible. For GNATfuzz, this applies both to any library resources that require closure to free memory and to any dynamically allocated state, for example, any memory allocated from a pool of available memory (often referred to as a heap) via the Ada keyword new.

You can find an example of how to reset dynamically allocated memory in the program under test before each test execution in Section 4.3.10.
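The effect of retained state on reproducibility can be illustrated with a language-neutral toy model. In the sketch below, a list stands in for dynamically allocated state and a loop stands in for the sequence of tests executed within one persistent-mode process; the class and function names are invented for the example and have no counterpart in GNATfuzz.

```python
class Harness:
    """Toy system under test whose result depends on retained state."""

    def __init__(self):
        self.seen = []  # stands in for dynamically allocated state

    def reset(self):
        """Release and recreate the state, as required before each test."""
        self.seen = []

    def run_test(self, value):
        """Return True if the value has not been seen since the last reset."""
        is_new = value not in self.seen
        self.seen.append(value)
        return is_new


def persistent_loop(harness, inputs, reset_between_tests):
    """Run several tests in the same 'process', optionally resetting state."""
    results = []
    for value in inputs:
        if reset_between_tests:
            harness.reset()
        results.append(harness.run_test(value))
    return results


print(persistent_loop(Harness(), [7, 7, 7], reset_between_tests=False))
print(persistent_loop(Harness(), [7, 7, 7], reset_between_tests=True))
```

Without the reset, the same input produces different outcomes depending on what ran before it, which is the kind of behavior that shows up as decreasing stability.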

Warning

Persistent mode is only suitable for programs where you can completely reset the state so that multiple calls in the same process are performed without any resource leaks and earlier runs do not have any impact on later runs. One way to check this is to look at the stability value, which you can monitor through the GNATfuzz stopping criteria and in the AFL++ terminal. A decrease in stability is a good indication that the fuzz target is retaining some state between test executions. In such a case, you either need to reset all the state of the program under test (including in dynamic memory) or, when that isn’t feasible, switch to plain mode.

4.3.1.4.3. GNATfuzz and the AFL++ defer mode¶

GNATfuzz requires the application under test to terminate within a predefined time (the default timeout is one second). If the application takes longer than the timeout, GNATfuzz assumes the process is in a hung state and copies the associated test case into the hangs directory. However, a fuzz-test session is only practical if we can maintain a high rate of test execution, which in turn requires that most tests execute significantly faster than the timeout.
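The timeout rule can be sketched as follows. This is an illustrative stand-in, not how GNATfuzz or AFL++ implement it: it runs an arbitrary command, treats an overrun as a hang, and treats termination by a signal as a crash. Run-time exceptions reported by a real test harness are not modeled, so any normal exit counts as ok here.

```python
import subprocess
import sys


def classify(command, timeout_s=1.0):
    """Run one test command and classify the outcome as ok, crash, or hang."""
    try:
        completed = subprocess.run(
            command,
            timeout=timeout_s,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except subprocess.TimeoutExpired:
        return "hang"   # the child is killed once the timeout expires
    if completed.returncode < 0:
        return "crash"  # on POSIX, a negative code means killed by a signal
    return "ok"


if __name__ == "__main__":
    quick = [sys.executable, "-c", "pass"]
    slow = [sys.executable, "-c", "import time; time.sleep(60)"]
    print(classify(quick, timeout_s=30), classify(slow, timeout_s=0.5))
```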

The AFL++ defer mode recognizes that some applications have initializations that take a long time. If such an initialization is repeated across all tests, you can use the defer mode to perform the initialization once and use it across all tests. Defer mode achieves this by delaying the initial fork of the main() application until a user-specified location is reached within the control flow.

4.3.1.4.4. GNATfuzz and the AFL++ defer-and-persistent mode¶

AFL++ defer-and-persistent mode combines the AFL++ defer and persistent modes. See above for a detailed explanation of these individual modes.

Note

The AFL++ defer and defer-and-persistent modes are currently not supported by GNATfuzz, but we expect to support them in subsequent GNATfuzz releases.

4.3.2. GNATfuzz automation capabilities and limitations¶

Section 4.1.3 provides an overview of the stages you need to use to get a fuzzing session up and running in an automated fashion, each corresponding to running one of the four operating modes of GNATfuzz; namely, analyze, generate, corpus-gen, and fuzz.

The degree to which GNATfuzz can automate a testing session is determined mainly by two factors: its ability to auto-generate test harnesses and its ability to auto-generate a starting corpus. We divide subprograms into three categories based on those two factors:

Fully-supported fuzzable subprograms: ones for which GNATfuzz can auto-generate both a test-harness and a starting corpus.

Partially-supported fuzzable subprograms: ones for which GNATfuzz can auto-generate a test-harness but not a starting corpus. In these cases, GNATfuzz provides a subprogram that allows you to provide your own seeds. GNATfuzz will encode the manually provided seeds and generate a starting corpus.

Non-supported fuzzable subprograms: subprograms for which GNATfuzz can neither auto-generate a test-harness nor a starting corpus.

Which subprograms belong to each of the three categories will evolve with future releases of GNATfuzz, with the goal of increasing automation in later releases. Also, the outcome of a GNATfuzz campaign targeting a non-compatible subprogram is dependent on the nature of the incompatibility. In some cases, the test harness will fail to compile. In other cases, the test harness will build and execute but not produce anything meaningful. In future versions of GNATfuzz, incompatible subprograms will be identified during the analysis mode.

In the current version, a test harness can be auto-generated for a subprogram unless any of the following is true:

Any of the subprogram’s “in” or “in out” mode parameters is of an access type or contains a subcomponent of an access type.

Any of the subprogram’s “in” or “in out” mode parameters is of a private type.

Any of the subprogram’s “in” or “in out” mode parameters is of an access-to-subprogram type.

Any of the subprogram’s “in” or “in out” mode parameters is of a limited type.

Any of the subprogram’s “out” mode parameters is an unconstrained record or array.

GNATfuzz can automatically generate a starting corpus for a subprogram unless any of the following is true:

A test harness can’t be generated for the subprogram (see above).

Any of the subprogram’s “in” or “in out” mode parameters is a type other than a scalar or an array of scalars (bounded or unbounded).
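The two rule sets above can be read as a small decision procedure. The sketch below encodes them over a hypothetical description of a subprogram's parameters; the `Param` record and its fields are invented for illustration and do not correspond to any GNATfuzz data structure or to the analyze.json format.

```python
from dataclasses import dataclass


@dataclass
class Param:
    """Hypothetical summary of one formal parameter."""
    mode: str                          # "in", "in out", or "out"
    kind: str                          # "scalar", "array", "record",
                                       # "access", or "subprogram_access"
    element_kind: str = ""             # for arrays: kind of the components
    has_access_component: bool = False
    is_private: bool = False
    is_limited: bool = False
    is_constrained: bool = True


def harness_supported(params):
    """Apply the test-harness rules listed above."""
    for p in params:
        if p.mode in ("in", "in out"):
            if (p.kind in ("access", "subprogram_access")
                    or p.has_access_component
                    or p.is_private
                    or p.is_limited):
                return False
        elif p.kind in ("record", "array") and not p.is_constrained:
            return False  # "out" mode unconstrained record or array
    return True


def corpus_supported(params):
    """Apply the starting-corpus rules listed above."""
    if not harness_supported(params):
        return False
    for p in params:
        if p.mode in ("in", "in out"):
            is_scalar = p.kind == "scalar"
            is_scalar_array = p.kind == "array" and p.element_kind == "scalar"
            if not (is_scalar or is_scalar_array):
                return False
    return True


def category(params):
    """Map a parameter profile to one of the three support categories."""
    if corpus_supported(params):
        return "fully-supported"
    if harness_supported(params):
        return "partially-supported"
    return "non-supported"


print(category([Param("in", "scalar"), Param("in", "scalar")]))
print(category([Param("in", "record")]))
print(category([Param("in", "access")]))
```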

Section 4.3.3 illustrates a case of a fully-supported fuzzable subprogram and Section 4.3.4 illustrates how you can fuzz a partially-supported fuzzable subprogram by providing your own seeds via a GNATfuzz-generated interface. Finally, Section 4.3.5 illustrates how to fuzz instantiations of generic subprograms.

4.3.3. Fuzzing a simple Ada subprogram¶

Let us consider this simple project:

package body Simple is

   function Is_Divisible (X : Integer; Y : Integer) return Boolean
   is
   begin
      if X mod Y = 0 then
         return true;
      else
         return false;
      end if;
   end Is_Divisible;

end Simple;

and:

package Simple is

   function Is_Divisible (X : Integer; Y : Integer) return Boolean;
   -- checks if X is divisible by Y

end Simple;

This project consists of a single function that takes two integer arguments and returns true if the first (X) is divisible by the second (Y). The code contains a deliberate bug: division by 0 is possible. The following steps demonstrate how the GNATfuzz toolchain can automatically detect this bug.

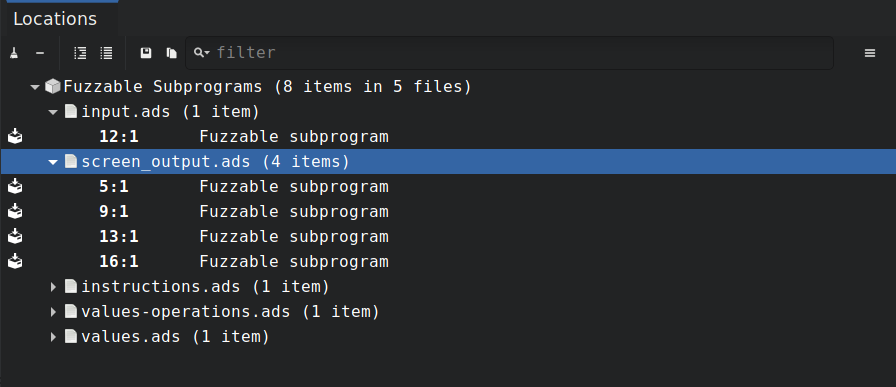

4.3.3.1. Using analyze mode¶

First, we run the GNATfuzz analyze mode on the project:

$ gnatfuzz analyze -P simple.gpr

Powered by the Libadalang technology, this mode scans the project to detect fuzzable subprograms and generates an analysis file under <simple-project-obj-dir>/gnatfuzz/analyze.json, which contains a table listing all the fuzzable subprograms detected. In the case of this project, that file looks like:

{
  "user_project": "<install_prefix>/share/examples/simple/simple.gpr",
  "scenario_variables": [],
  "fuzzable_subprograms": [
    {
      "source_filename": "<install_prefix>/share/examples/simple/src/simple.ads",
      "start_line": 3,
      "id": 1,
      "label": "Is_Divisible",
      "corpus-gen-supported": true
    }
  ]
}

Here we see that GNATfuzz determined that the subprogram declared in the simple.ads specification file at line 3 is fuzzable. This corresponds to the Is_Divisible function. You can now use the GNATfuzz generate mode to autogenerate a test harness suitable for fuzzing.
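When a project contains many subprograms, it can be convenient to list the entries of the analysis file from a script. The sketch below reads the fields shown in the example file above; the sample text is copied from that example, and the function name is illustrative.

```python
import json


def fuzzable_subprograms(analysis_text):
    """Return (id, label, source file, line, corpus-gen-supported) tuples."""
    analysis = json.loads(analysis_text)
    return [
        (
            entry["id"],
            entry["label"],
            entry["source_filename"],
            entry["start_line"],
            entry.get("corpus-gen-supported", False),
        )
        for entry in analysis["fuzzable_subprograms"]
    ]


# Content of the analysis file from the example above.
SAMPLE = """
{
  "user_project": "<install_prefix>/share/examples/simple/simple.gpr",
  "scenario_variables": [],
  "fuzzable_subprograms": [
    {
      "source_filename": "<install_prefix>/share/examples/simple/src/simple.ads",
      "start_line": 3,
      "id": 1,
      "label": "Is_Divisible",
      "corpus-gen-supported": true
    }
  ]
}
"""

for ident, label, source, line, has_corpus in fuzzable_subprograms(SAMPLE):
    print(f"{ident}: {label} ({source}:{line}) corpus-gen={has_corpus}")
```

The id and the source location printed here are the values that the generate mode accepts through --subprogram-id or through -S and -L.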

4.3.3.2. Using generate mode¶

We can do this in one of two ways.

We can specify the location of the subprogram as given in analyze.json:

$ gnatfuzz generate -P simple.gpr -S ./src/simple.ads -L 3 -o generated_test_harness

Or we can use the unique identification number (id) for the subprogram as given in the analyze.json file:

$ gnatfuzz generate -P simple.gpr --analysis ./obj/gnatfuzz/analyze.json --subprogram-id 1 -o generated_test_harness

Either of these commands generates a new fuzz test-harness project and all the artifacts needed to support a fuzz-test session for the selected subprogram. These are placed under the specified output directory, generated_test_harness in our case, with the following directory structure:

├── generated_test_harness
│   ├── fuzz_testing              -- subprogram-specific test-harness artifacts
│   │   ├── build                 -- corpus tools
│   │   ├── generated_src         -- test-harness source code
│   │   └── user_configuration    -- user configurable files
│   └── gnatfuzz_shared           -- gnatfuzz lib artifacts
│       ├── ...
│       └── ...

In short:

fuzz_testing/fuzz_test.gpr - the new project file of the test harness that wraps the subprogram under test to enable the injection of test cases, the capture of crashes, and coverage analysis.

fuzz_testing/generated_src - the test-harness source code that you should not modify under normal circumstances.

fuzz_testing/user_configuration - the test-harness source code that you may modify when needed. For example, you may provide the starting corpus here (see Section 4.3.4).

fuzz_testing/fuzz_config.json - contains all the information and configuration needed by the GNATfuzz fuzz mode to enable the fuzz-testing of the generated test harness.

fuzz_testing/build - includes all the corpus-related tools needed for GNATfuzz to automatically generate the starting corpus and to encode and decode test cases.

4.3.3.3. Using corpus-gen mode¶

The subprogram under test is suitable for starting corpus auto-generation (see the "corpus-gen-supported": true field in <simple-project-obj-dir>/gnatfuzz/analyze.json). Therefore, we can use the following GNATfuzz command to automatically generate the starting corpus for our fuzzing session:

$ gnatfuzz corpus-gen -P ./generated_test_harness/fuzz_testing/fuzz_test.gpr -o starting_corpus

This generates multiple test cases under the starting_corpus directory. Each is suitable for testing the chosen subprogram using the fuzz mode. The test cases are in a binary format.

Note

Currently, starting corpus auto-generation support includes all the integral types and arrays of integral types (including strings). There are ongoing efforts to increase the supported types to the full scope of Ada types. Despite this, GNATfuzz generate can produce test-harnesses for a more extensive set of subprograms, beyond the ones for which their starting corpus can be auto-generated. GNATfuzz also automatically generates a framework to assist you in manually providing the starting corpus for these cases. We demonstrate this in Section 4.3.4.

Note

The corpus-gen mode is currently in its preliminary stages. It includes only one default strategy for auto-generating a starting corpus (using the First, Middle, and Last values for scalar types). Thus, when the starting corpus for a subprogram can be auto-generated, you can currently skip the corpus-gen mode: the fuzz mode calls the corpus-gen mode in the background before performing any fuzzing-related activities.
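To make the First, Middle, and Last idea concrete, here is a minimal Python sketch for integer ranges. It is not GNATfuzz code: the function names, the rounding used for the middle value, and the choice to combine the per-parameter values as a Cartesian product are all assumptions made for illustration.

```python
from itertools import product


def first_middle_last(first: int, last: int) -> list[int]:
    """Return the First, Middle and Last values of an inclusive integer range."""
    if first > last:
        raise ValueError("empty range")
    # Assumption: the middle value is the midpoint, rounded towards First.
    middle = first + (last - first) // 2
    # dict.fromkeys drops duplicates (tiny ranges) while keeping the order.
    return list(dict.fromkeys([first, middle, last]))


def seed_combinations(*ranges: tuple[int, int]) -> list[tuple[int, ...]]:
    """Combine the per-parameter values (assumed here to be a Cartesian product)."""
    return list(product(*(first_middle_last(lo, hi) for lo, hi in ranges)))


# Two parameters of a 32-bit Integer type, like X and Y of Is_Divisible.
INTEGER = (-2**31, 2**31 - 1)
seeds = seed_combinations(INTEGER, INTEGER)
print(len(seeds))
print(seeds[0])
```

Under these assumptions, two Integer parameters yield nine candidate seeds, one of which sets both parameters to Integer'First.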

4.3.3.4. Decoding an auto-generated test case¶

If you want to inspect the contents of an auto-generated test case, you can use the decoding utility, which can be found at:

<install_prefix>/share/examples/simple/generate_test_harness/fuzz_testing/build/decode_test

For example:

$ ./decode_test ./starting_corpus/GNATfuzz_1

Parameter: Param 1 [Integer] = -2147483648

Parameter: Param 2 [Integer] = -2147483648

Here, the GNATfuzz_1 auto-generated test case is decoded and its values are printed. Param 1 and Param 2 correspond to the X and Y arguments of the Is_Divisible function, respectively.

4.3.3.5. Using fuzz mode¶

Now everything is in place for fuzz-testing the selected subprogram under test. We can use the following command to launch a fuzzing session:

$ gnatfuzz fuzz -P ./generated_test_harness/fuzz_testing/fuzz_test.gpr --corpus-path ./starting_corpus/ --cores=2

This command does the following:

Builds the test harness.

Builds the system-under-test with the AFL instrumentation pass.

Builds the system-under-test with GNATcoverage.

Minimizes the starting corpus using the afl-cmin tool, keeping only the test cases that execute unique paths of the system under test.

Launches the AFL++ fuzz-testing session.

Collects dynamic coverage and fuzzing statistics.

Monitors the stop criteria and stops the fuzzing session if they’re met.

Displays status information.
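If you script fuzzing campaigns, for example from a CI job, the command lines shown in this section can be assembled programmatically. The sketch below is only an illustration: the helper names are hypothetical, it uses only the switches that appear in this guide, and by default it prints the commands instead of running them.

```python
import subprocess


def corpus_gen_command(harness_project: str, corpus_dir: str) -> list[str]:
    """Command line for the corpus-gen mode, as used in this section."""
    return ["gnatfuzz", "corpus-gen", "-P", harness_project, "-o", corpus_dir]


def fuzz_command(harness_project: str, corpus_dir: str, cores: int = 2) -> list[str]:
    """Command line for the fuzz mode, as used in this section."""
    return ["gnatfuzz", "fuzz", "-P", harness_project,
            "--corpus-path", corpus_dir, f"--cores={cores}"]


def run_campaign(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            # check=True stops the campaign at the first failing step.
            subprocess.run(command, check=True)


HARNESS = "./generated_test_harness/fuzz_testing/fuzz_test.gpr"
CORPUS = "./starting_corpus/"

run_campaign([corpus_gen_command(HARNESS, CORPUS), fuzz_command(HARNESS, CORPUS)])
```

Passing dry_run=False requires gnatfuzz to be on the PATH and the test harness to have been generated beforehand.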

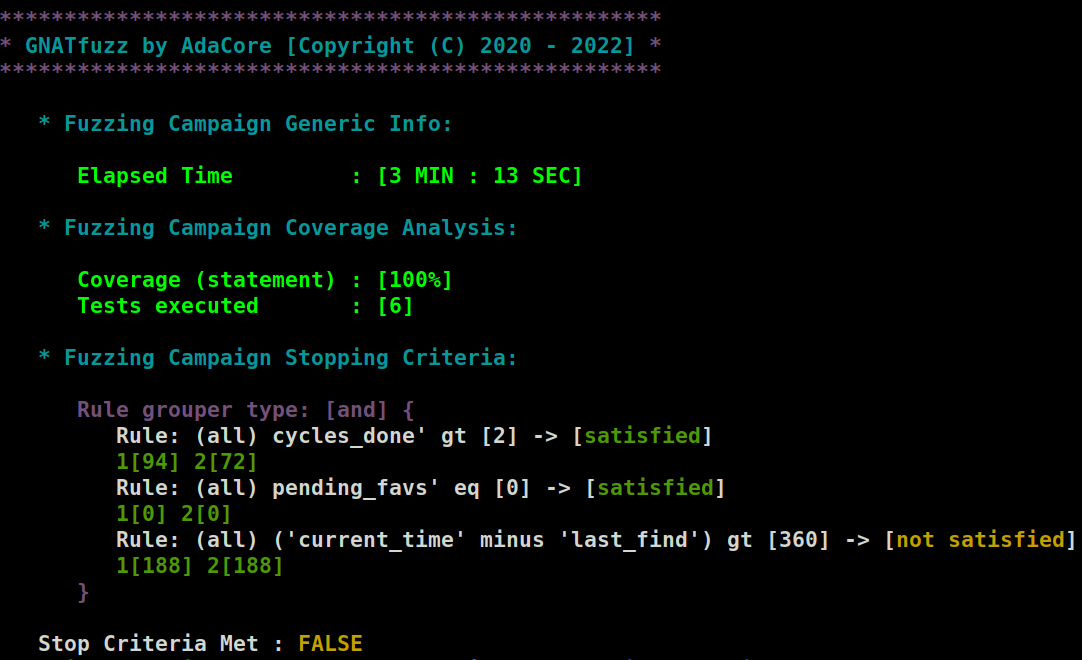

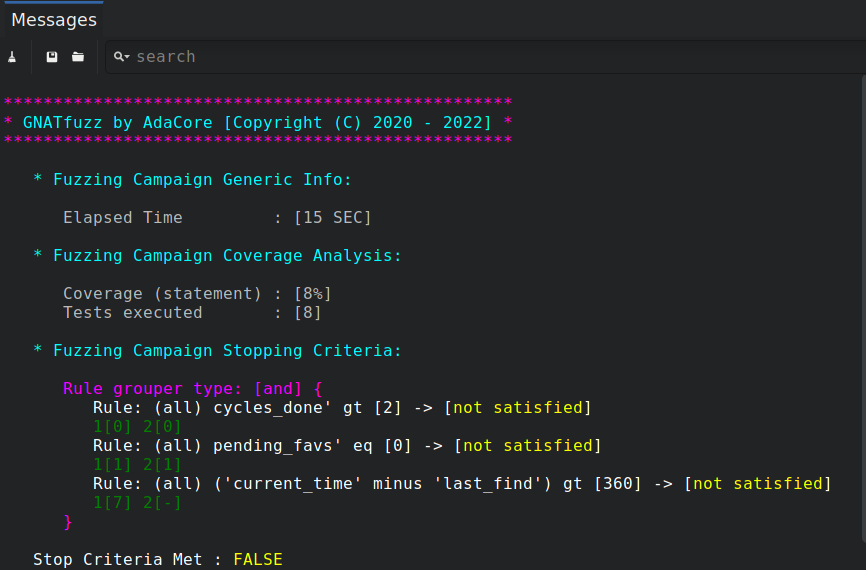

Fig. 4.5 shows the status information displayed during a fuzzing session for this system under test. GNATfuzz always displays the elapsed time since the fuzzing session was started, code coverage information based on GNATcoverage statement coverage, and the progress towards meeting the predefined stopping criteria for the session. The coverage information and stopping criteria sections can be disabled using the fuzz-mode switches --no-GNATcov and --ignore-stop-criteria, respectively.

Fig. 4.5 GNATfuzz fuzzing session status overview¶

Important

The stopping criteria are defined in XML format. GNATfuzz uses a set of default stopping criteria if you don’t provide your own via the --stop-criteria fuzz-mode switch. The generate mode also creates a local copy of this default stopping criteria XML file within the auto-generated test harness. You can inspect and modify these default criteria and point to them by specifying the switch --stop-criteria=<path-to-generated-test-harness>/fuzz_testing/user_configuration/stop_criteria.xml. If you want to ignore the stopping criteria and stop a fuzzing session manually using Ctrl+C in the terminal, you must use the --ignore-stop-criteria fuzz-mode switch. More information on GNATfuzz’s stopping criteria can be found in Section 4.5.

The fuzzing command generates a session directory under <path-to-generated-test-harness>/fuzz_testing/session with the following structure:

session/

├── build -- The object-files of various compilations needed

│ ├── obj-AFL_PLAIN

│ └── obj-COVERAGE

│ └── fuzz_test-gnatcov-instr

├── coverage_output -- Dynamic coverage reporting

├── fuzzer_output -- AFL++ generated artifacts

│ ├── gnatfuzz_1_master -- The "master's" fuzzing-process generated artifacts

│ │ ├── crashes -- Test-cases found to crash the system-under-test

│ │ ├── hangs -- Test-cases found to hang the system-under-test

│ │ └── queue -- Test-cases found to exercise a unique execution path

│ ├── gnatfuzz_2_slave -- The "slave's" fuzzing-process generated artifacts

│ │ ├── crashes -- Test-cases found to crash the system-under-test

│ │ ├── hangs -- Test-cases found to hang the system-under-test

│ │ └── queue -- Test-cases found to exercise a unique execution path

└── used_starting_corpus -- The starting-corpus used by the fuzzing session

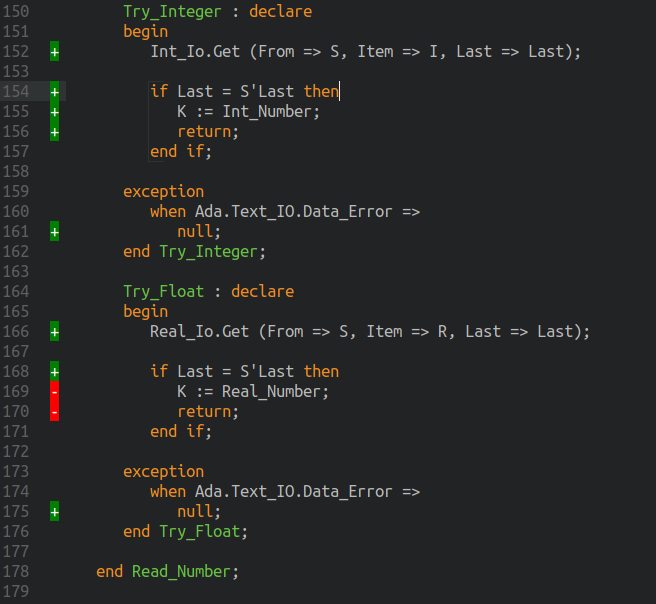

You should be aware of the following when evaluating or interpreting a fuzzing session:

Multiple fuzzing processes: The fuzz-mode switch --cores specifies the number of fuzzing processes to run. Each process runs on its own core and generates its artifacts directory under the fuzzer_output directory. The first process is always the master (gnatfuzz_1_master) and the rest are slave processes. Using more cores allocates more computing power to the fuzzing session and may speed up coverage and bug finding. But be careful not to exhaust your system resources, since that slows fuzzing down. To monitor the results of a fuzzing session, it is enough to inspect only the master process’s artifacts (gnatfuzz_1_master), because all the slave processes communicate their findings to the master process.

Coverage: The dynamic coverage collected during a fuzzing session is provided in the coverage_output directory. This is statement-based coverage using the source-code instrumentation coverage provided by GNATcoverage (see source-traces-based coverage by GNATcoverage). An index.html file is generated under the coverage directory; open it in a browser to navigate the system under test’s code annotated with coverage information. The coverage percentages are currently reported for the entire compilation module, not for the subprogram selected from that module for fuzzing. So even if the fuzzing executes all the statements in the selected subprogram, the coverage percentage may never reach 100% when the compilation module contains more subprograms than the one chosen for fuzzing. The exception is when the subprogram under test calls all the other subprograms. Keep this in mind when using coverage as a stopping criterion.

Used Starting Corpus: The used_starting_corpus directory of the session directory holds the starting corpus used by the fuzzing session. This might be the same as the auto-generated or user-provided starting corpus, or a reduced version of it if afl-cmin is enabled. Note that the fuzz mode of GNATfuzz currently enables afl-cmin by default (use the --no-cmin switch to disable it).

AFL++ raw fuzzing stats: You can inspect the raw AFL++ generated statistics during the fuzzing session by examining the fuzzer_stats file under the master’s process artifacts directory (gnatfuzz_1_master). An example of an AFL++ status file is shown in Fig. 4.2.
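The fuzzer_stats file is plain text with one "key : value" pair per line, so it is easy to post-process. The following Python sketch parses such text into a dictionary. The sample content is illustrative only (its values are made up, and the exact field names vary between AFL++ versions), and the function name is hypothetical.

```python
def parse_fuzzer_stats(text: str) -> dict[str, str]:
    """Parse AFL++ fuzzer_stats text ("key : value" per line) into a dict."""
    stats = {}
    for line in text.splitlines():
        # Split on the first colon only: values such as command lines
        # may themselves contain colons.
        key, separator, value = line.partition(":")
        if separator:
            stats[key.strip()] = value.strip()
    return stats


# Illustrative sample, not taken from a real session.
SAMPLE = """\
start_time        : 1700000000
execs_done        : 258
saved_crashes     : 1
command_line      : afl-fuzz -i in -o out -- ./target
"""

stats = parse_fuzzer_stats(SAMPLE)
print(stats["execs_done"])
```

In a real session you would read the text from the fuzzer_stats file in the gnatfuzz_1_master directory instead of using the SAMPLE string.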

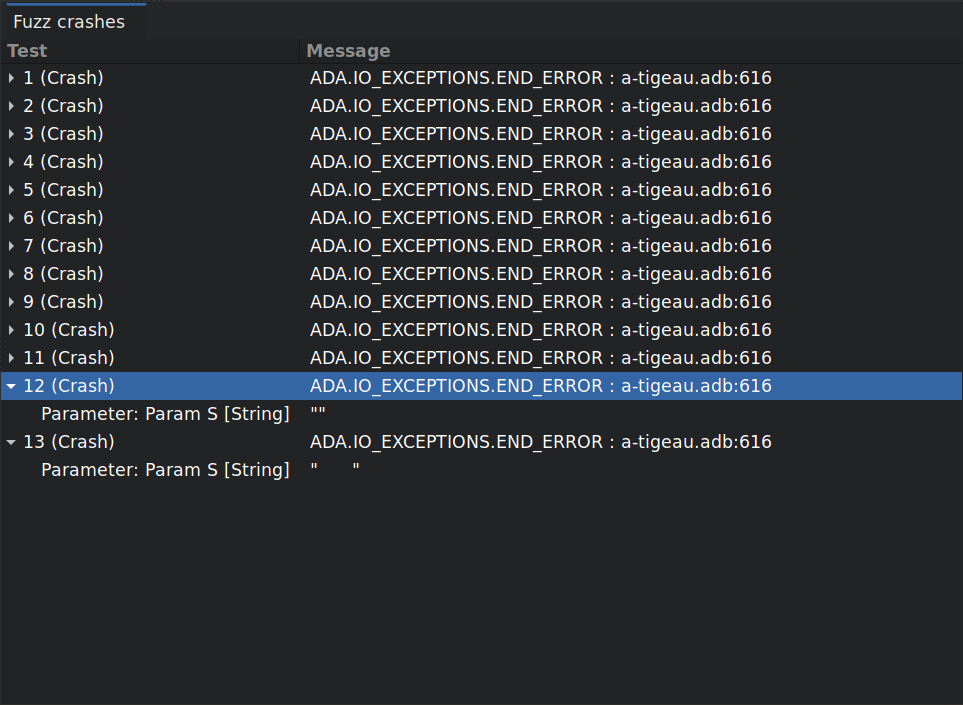

When the fuzz-testing session is completed (either because the stopping criteria are satisfied or because we killed it manually), we can check the crashes directory to see whether the fuzzer captured the expected divide-by-zero bug in the function under test:

$ cd /gnatfuzz_1_master

$ ls crashes/

id:000000,sig:06,src:000000,time:64,execs:258,op:flip32,pos:4 README.txt

$ ../../../build/decode_test crashes/id\:000000\,sig\:06\,src\:000000\,time\:64\,execs\:258\,op\:flip32\,pos\:4

Parameter: Param 1 [Integer] = -1

Parameter: Param 2 [Integer] = 0

As you can see, GNATfuzz produced one crashing test case (note that the

naming of the crashing case can vary). By decoding this case, we can see

that the value for the divisor (argument Y of Is_Divisible in

Code Sample 4.6) is zero. You can now use

this crashing test to debug and correct the subprogram under test.
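The name of a crashing test case encodes some metadata as comma-separated "key:value" fields. The Python sketch below splits the name shown above into those fields. The function name is hypothetical, and the field meanings mentioned in the comments follow AFL++ naming conventions, not anything GNATfuzz-specific.

```python
def parse_crash_name(name: str) -> dict[str, str]:
    """Split an AFL++ crash file name into its key:value fields."""
    fields = {}
    for part in name.split(","):
        key, separator, value = part.partition(":")
        if separator:
            fields[key] = value
    return fields


name = "id:000000,sig:06,src:000000,time:64,execs:258,op:flip32,pos:4"
info = parse_crash_name(name)

# "sig" is the number of the signal that terminated the target,
# "op" the mutation that produced the input.
print(int(info["sig"]), info["op"])
```

Signal 6 is SIGABRT on Linux, which is consistent with the "Aborted" message printed when the crashing test is replayed.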

You can also run the AFL-generated executable of the subprogram under test with the crashing test as follows:

$ cd /gnatfuzz_1_master

$ ls crashes/

id:000000,sig:06,src:000000,time:64,execs:258,op:flip32,pos:4 README.txt

$ ../../build/obj-AFL_PLAIN/fuzz_test_harness.afl_fuzz crashes/id\:000000\,sig\:06\,src\:000000\,time\:64\,execs\:258\,op\:flip32\,pos\:4

[+] GNATfuzz : Calling User Setup

[+] GNATfuzz : Calling Subprogram Under Test

[+] GNATfuzz :

Parameter: Param X [Integer] = -1

Parameter: Param Y [Integer] = 0

[+] GNATfuzz : Exception occurred! Generating core dump. [CONSTRAINT_ERROR] [simple.adb:6 divide by zero] [raised CONSTRAINT_ERROR : simple.adb:6 divide by zero]

Aborted

4.3.4. Fuzzing an autogenerated test-harness with user-provided seeds¶

Let’s now try to fuzz the records example, which contains the following

source:

package Show_Date is

   type Months is
     (January, February, March, April,
      May, June, July, August, September,
      October, November, December);

   type Date is record
      Day : Integer range 1 .. 31;
      Month : Months;
      Year : Integer range 1 .. 3000 := 2032;
   end record;

   procedure Display_Date (D : Date);
   -- Displays given date

end Show_Date;

with Ada.Text_IO; use Ada.Text_IO;

package body Show_Date is

   procedure Display_Date (D : Date) is
   begin
      Put_Line ("Day:" & Integer'Image (D.Day)
                & ", Month: "
                & Months'Image (D.Month)
                & ", Year:"
                & Integer'Image (D.Year));
   end Display_Date;

end Show_Date;

First, we run the GNATfuzz analyze mode on the project:

$ gnatfuzz analyze -P records_test.gpr

This mode scans the project to detect fuzzable subprograms and generates an

analysis file <records_test-project-obj-dir>/gnatfuzz/analyze.json,

which contains a table listing all the fuzzable subprograms detected. In

our case, the analysis information file looks like this:

{
    "user_project": "<install_prefix>/share/examples/records/records_test.gpr",
    "scenario_variables": [],
    "fuzzable_subprograms": [
        {
            "source_filename": "<install_prefix>/share/examples/records/src/show_date.ads",
            "start_line": 14,
            "id": 1,
            "corpus_gen_supported": false
        }
    ]
}

By inspecting the generated analysis file, we can see there’s one fuzzable subprogram that GNATfuzz can auto-generate a test harness for, namely Display_Date. However, GNATfuzz can’t auto-generate a starting corpus for it, as shown by the corpus_gen_supported field being false.
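When a project has many fuzzable subprograms, it can help to list those for which you will have to provide seeds yourself. The Python sketch below does this for the analysis file shown above, using only the fields that appear in it. The function name is hypothetical, and the JSON is embedded as a string so the example is self-contained; in practice you would load the analyze.json file instead.

```python
import json

# Copy of the analysis file shown above.
ANALYSIS_TEXT = """
{
    "user_project": "<install_prefix>/share/examples/records/records_test.gpr",
    "scenario_variables": [],
    "fuzzable_subprograms": [
        {
            "source_filename": "<install_prefix>/share/examples/records/src/show_date.ads",
            "start_line": 14,
            "id": 1,
            "corpus_gen_supported": false
        }
    ]
}
"""


def needs_user_seeds(analysis: dict) -> list[dict]:
    """Return the subprograms whose starting corpus can't be auto-generated."""
    return [
        subprogram
        for subprogram in analysis["fuzzable_subprograms"]
        # Entries for generic subprograms keep this flag per instantiation,
        # so only entries that carry the flag themselves are considered here.
        if subprogram.get("corpus_gen_supported") is False
    ]


analysis = json.loads(ANALYSIS_TEXT)
for subprogram in needs_user_seeds(analysis):
    print(subprogram["id"], subprogram["source_filename"], subprogram["start_line"])
```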

We then proceed to generate a test harness and try to auto-generate a starting corpus:

$ gnatfuzz generate -P records_test.gpr -S ./src/show_date.ads -L 14 -o generated_test_harness

$ gnatfuzz corpus-gen -P ./generated_test_harness/fuzz_testing/fuzz_test.gpr -o starting_corpus

INFO: * Building corpus creator.....INFO: Done

INFO: * Generating corpus...INFO: Done

ERROR: Failed to create a starting corpus - check if the User_Provided_Seeds subprogram needs completing within the User Configuration package

ERROR: ERROR_STARTING_CORPUS_EMPTY_AFTER_GENERATION

ERROR: For more details, see ./generated_test_harness/fuzz_testing/build/gnatfuzz/corpus_gen.log

As we can see, GNATfuzz was able to generate a test harness for the given subprogram, but couldn’t auto-generate a starting corpus. This is because the current GNATfuzz version doesn’t yet support starting-corpus auto-generation for record types. Future versions of GNATfuzz will allow this. In cases where automatic corpus generation is not yet supported, GNATfuzz generates a simple interface that lets you provide seeds, which GNATfuzz then uses to generate the starting corpus required for fuzzing:

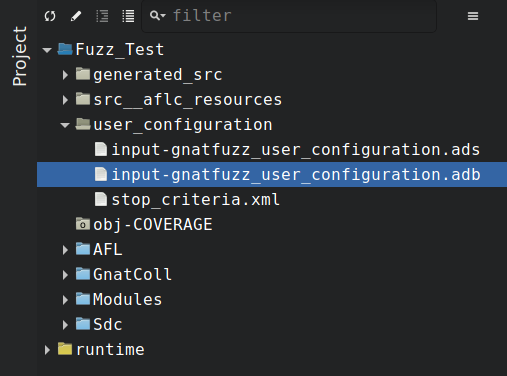

generated_test_harness/fuzz_testing/user_configuration/

├── show_date-gnatfuzz_user_configuration.adb

├── show_date-gnatfuzz_user_configuration.ads

└── stop_criteria.xml

The actual package namespace is either a child of GNATfuzz or,

when available, a child of the package holding the subprogram under

test. In this case, the package will be generated as a child package of

Show_Date.

Now we can see the User_Provided_Seeds procedure in

show_date-gnatfuzz_user_configuration.adb:

procedure User_Provided_Seeds is
begin
   -- Complete here with calls to Add_Corpus_Seed with each set of seed values.
   --
   -- For instance, add two calls
   -- Add_Corpus_Seed (A, B);
   -- Add_Corpus_Seed (C, D);
   -- to generate two seeds, one with input values (A, B) and one with (C, D).

   null;
end User_Provided_Seeds;

We can now edit this procedure to add seeds for the subprogram under test, as follows:

procedure User_Provided_Seeds is
begin
   Add_Corpus_Seed (Fuzz_Input_Param_1 => ((24, Show_Date.February, 1978)));
   Add_Corpus_Seed (Fuzz_Input_Param_1 => ((29, Show_Date.March, 1978)));
   Add_Corpus_Seed (Fuzz_Input_Param_1 => ((29, Show_Date.March, 1984)));
   Add_Corpus_Seed (Fuzz_Input_Param_1 => ((11, Show_Date.August, 1994)));
end User_Provided_Seeds;

where Fuzz_Input_Param_1 is the parameter of the subprogram under test,

Display_Date (D : Date). Let’s try generating the starting corpus

again:

$ gnatfuzz corpus-gen -P ./generated_test_harness/fuzz_testing/fuzz_test.gpr -o starting_corpus

INFO: * Building corpus creator....INFO: Done

INFO: * Generating corpus...INFO: Done

By inspecting the generated starting corpus and decoding it, we can see it’s correctly generated in binary format and ready to be used for a fuzzing session:

$ ls starting_corpus/

User_1 User_2 User_3 User_4

$ ./generated_test_harness/fuzz_testing/build/decode_test starting_corpus/User_3

Parameter: Param 1 [Date] =

(DAY => 29,

MONTH => MARCH,

YEAR => 1984)

$ gnatfuzz fuzz -P ./generated_test_harness/fuzz_testing/fuzz_test.gpr --corpus-path ./starting_corpus/ --cores=1

4.3.5. Fuzzing instantiations of generic subprograms¶

For GNATfuzz to test a generic subprogram, we must provide an

instantiation. The GNATfuzz analyze mode can report the instantiation

in its generated analysis file if it consists of a fuzzable subprogram (see

Section 4.3.2 for what is considered a fuzzable subprogram). When multiple

instantiations exist, the analyze mode provides a list of all those that

are fuzzable to allow you to select the one to be fuzz-tested. When a

chain of instantiations exists, the analyze mode also reports that.

Let’s now try to fuzz the generics example, which has the following

source code:

generic
   type Index_Type is range <>;
   type Element_Type is private;
   with function "<" (L, R : Element_Type) return Boolean is <>;
package Sorting_Algorithms is

   type Array_To_Sort is array (Index_Type) of Element_Type;

   procedure Selection_Sort (X : in out Array_To_Sort);

   procedure Bubble_Sort (X : in out Array_To_Sort);

end Sorting_Algorithms;

with Sorting_Algorithms;

generic
   type Index_Type is range <>;
   type Element_Type is private;
   with function "<" (L, R : Element_Type) return Boolean is <>;
package Sorting_Multiple_Arrays is

   package Sort_Array is new Sorting_Algorithms (Index_Type, Element_Type);

   type Sortable_Matrix is array (Index_Type) of Sort_Array.Array_To_Sort;

   procedure Selection_Sort (Matrix : in out Sortable_Matrix);

   procedure Bubble_Sort (Matrix : in out Sortable_Matrix);

end Sorting_Multiple_Arrays;

package body Sorting_Multiple_Arrays is

   procedure Selection_Sort (Matrix : in out Sortable_Matrix) is
   begin
      for K in Matrix'Range loop
         Sort_Array.Selection_Sort (Matrix (K));
      end loop;
   end Selection_Sort;

   procedure Bubble_Sort (Matrix : in out Sortable_Matrix) is
   begin
      for K in Matrix'Range loop
         Sort_Array.Bubble_Sort (Matrix (K));
      end loop;
   end Bubble_Sort;

end Sorting_Multiple_Arrays;

with Sorting_Algorithms;
with Sorting_Multiple_Arrays;

package Instantiations is

   type Range_Array_5 is range 1 .. 5;

   package Sort_Integer_Array_Of_Range_5 is new Sorting_Algorithms
     (Range_Array_5, Integer);

   package Sort_Integer_Arrays_Of_Range_5 is
     new Sorting_Multiple_Arrays (Range_Array_5, Integer);

end Instantiations;

We don’t show the sorting_algorithms.adb file, which implements the two sorting algorithms, Selection_Sort and Bubble_Sort. You can find this file in the example’s source directory.

First, we run the GNATfuzz analyze mode on the project:

$ gnatfuzz analyze -P generics.gpr

This generates the analysis file <generics_test-project-obj-dir>/gnatfuzz/analyze.json, which contains a table with all the fuzzable subprograms detected:

{

"user_project": "<install_prefix>/share/examples/generic/generics.gpr",

"scenario_variables": [],

"fuzzable_subprograms": [

{

"source_filename": "<install_prefix>/share/examples/generic/src/sorting_algorithms.ads",

"start_line": 9,

"label": "Selection_Sort",

"instantiations": [

{

"id": 1,

"corpus_gen_supported": true,

"instantiation_chain": [

{

"source_filename": "<install_prefix>/share/examples/generic/src/instantiations.ads",

"start_line": 8,

"label": "Sort_Integer_Array_Of_Range_5"

}

]

},

{

"id": 2,

"corpus_gen_supported": true,

"instantiation_chain": [

{

"source_filename": "<install_prefix>/share/examples/generic/src/instantiations.ads",

"start_line": 11,

"label": "Sort_Integer_Arrays_Of_Range_5"

},

{

"source_filename": "<install_prefix>/share/examples/generic/src/sorting_multiple_arrays.ads",

"start_line": 9,

"label": "Sort_Array"

}

]

}

]

},

{

"source_filename": "<install_prefix>/share/examples/generic/src/sorting_algorithms.ads",

"start_line": 11,

"label": "Bubble_Sort",

"instantiations": [

{

"id": 3,

"corpus_gen_supported": true,

"instantiation_chain": [

{

"source_filename": "<install_prefix>/share/examples/generic/src/instantiations.ads",

"start_line": 8,

"label": "Sort_Integer_Array_Of_Range_5"

}

]

},

{

"id": 4,

"corpus_gen_supported": true,

"instantiation_chain": [

{

"source_filename": "<install_prefix>/share/examples/generic/src/instantiations.ads",

"start_line": 11,

"label": "Sort_Integer_Arrays_Of_Range_5"

},

{

"source_filename": "<install_prefix>/share/examples/generic/src/sorting_multiple_arrays.ads",

"start_line": 9,

"label": "Sort_Array"

}

]

}

]

},

{

"source_filename": "<install_prefix>/share/examples/generic/src/sorting_multiple_arrays.ads",

"start_line": 13,

"label": "Selection_Sort",

"instantiations": [

{

"id": 5,

"corpus_gen_supported": true,

"instantiation_chain": [

{

"source_filename": "<install_prefix>/share/examples/generic/src/instantiations.ads",

"start_line": 11,

"label": "Sort_Integer_Arrays_Of_Range_5"

}

]

}

]

},

{

"source_filename": "<install_prefix>/share/examples/generic/src/sorting_multiple_arrays.ads",

"start_line": 15,

"label": "Bubble_Sort",

"instantiations": [

{

"id": 6,

"corpus_gen_supported": true,

"instantiation_chain": [

{

"source_filename": "<install_prefix>/share/examples/generic/src/instantiations.ads",

"start_line": 11,

"label": "Sort_Integer_Arrays_Of_Range_5"

}

]

}

]

}

]

}


Let’s now focus on fuzzing the Selection_Sort generic subprogram from the Sorting_Algorithms package. At line 9 of analysis file 4.22, we see a list of two possible instantiations of this generic subprogram, with IDs 1 and 2, respectively.

By inspecting the source code, we can see the following two instantiations:

1. instantiations.ads:Sort_Integer_Array_Of_Range_5 -> sorting_algorithms.ads:Selection_Sort

2. instantiations.ads:Sort_Integer_Arrays_Of_Range_5 -> sorting_multiple_arrays.ads:Sort_Array -> sorting_algorithms.ads:Selection_Sort

The first is a direct instantiation of the generic Selection_Sort

subprogram and the second is an indirect instantiation of the same generic

via a chain of instantiations. By using the IDs of the instantiations, we

can generate a test-harness for the selected instantiation of the

Selection_Sort and perform a fuzz-testing session. For example:

$ gnatfuzz generate -P generics.gpr --analysis obj/gnatfuzz/analyze.json --subprogram-id 2 -o generated_test_harness

$ gnatfuzz fuzz -P generated_test_harness/fuzz_testing/fuzz_test.gpr
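To pick the right ID without reading the whole analysis file, you can print each instantiation chain next to its ID. The Python sketch below does this for a trimmed copy of the Selection_Sort entry from the analysis file above. The function name is hypothetical and the sample keeps only the fields the sketch reads.

```python
# Trimmed copy of the Selection_Sort entry of the analysis file above.
ANALYSIS = {
    "fuzzable_subprograms": [
        {
            "label": "Selection_Sort",
            "instantiations": [
                {
                    "id": 1,
                    "instantiation_chain": [
                        {"label": "Sort_Integer_Array_Of_Range_5"},
                    ],
                },
                {
                    "id": 2,
                    "instantiation_chain": [
                        {"label": "Sort_Integer_Arrays_Of_Range_5"},
                        {"label": "Sort_Array"},
                    ],
                },
            ],
        }
    ]
}


def list_instantiations(analysis: dict) -> list[tuple[int, str]]:
    """Return (id, "chain -> subprogram") pairs for every instantiation."""
    rows = []
    for subprogram in analysis["fuzzable_subprograms"]:
        # Non-generic subprograms have no "instantiations" list.
        for instantiation in subprogram.get("instantiations", []):
            chain = [step["label"] for step in instantiation["instantiation_chain"]]
            rows.append((instantiation["id"], " -> ".join(chain + [subprogram["label"]])))
    return rows


for identifier, chain in list_instantiations(ANALYSIS):
    print(identifier, chain)
```

The ID printed for the chain you want is the value to pass to the --subprogram-id switch of the generate mode.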

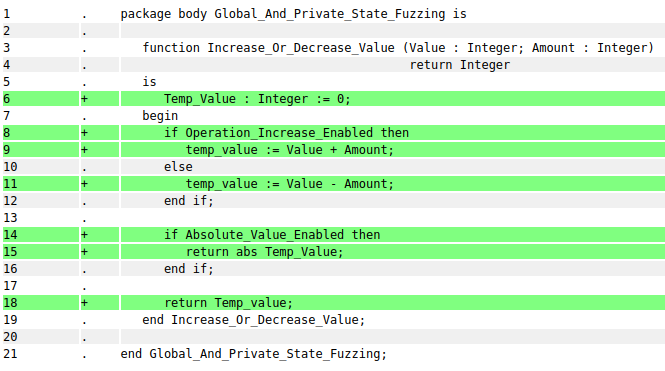

4.3.6. Fuzzing global and private state¶

The current version of GNATfuzz doesn’t support the automatic generation of

test harnesses that can fuzz global and private states, which can also

affect a subprogram’s execution. Fortunately, there are easy ways to enable

the fuzzing of such a state (to understand if it’s considered fuzzable, see

Section 4.3.2). In this example, we demonstrate a simple way of enabling the

fuzzing of global and private variables that affect the execution of a

subprogram. Let’s consider the global_and_private_state example with

the following source:

package Global_And_Private_State_Fuzzing is

   Operation_Increase_Enabled : Boolean := True;

   function Increase_Or_Decrease_Value
     (Value : Integer; Amount : Integer) return Integer;
   -- Based on the "Operation_Increase_Enabled" this function will
   -- either increase or decrease the given "Value" by the given "Amount",
   -- and based on the "Absolute_Value_Enabled", it will return either the
   -- absolute value or not of the selected operation's result

private
   Absolute_Value_Enabled : Boolean := False;

end Global_And_Private_State_Fuzzing;

package body Global_And_Private_State_Fuzzing is

   function Increase_Or_Decrease_Value (Value : Integer; Amount : Integer)
      return Integer
   is
      Temp_Value : Integer := 0;
   begin
      if Operation_Increase_Enabled then
         Temp_Value := Value + Amount;
      else
         Temp_Value := Value - Amount;
      end if;

      if Absolute_Value_Enabled then
         return abs Temp_Value;
      end if;

      return Temp_Value;

   end Increase_Or_Decrease_Value;

end Global_And_Private_State_Fuzzing;

The operation performed by the Increase_Or_Decrease_Value subprogram is controlled by the global variable Operation_Increase_Enabled. The result of the selected operation is conditionally returned as an absolute value depending on the Absolute_Value_Enabled private variable. Let’s try to fuzz this subprogram as is:

$ gnatfuzz analyze -P global_and_private_state.gpr

$ gnatfuzz generate -P global_and_private_state.gpr -S src/global_and_private_state_fuzzing.ads -L 5 -o generated_test_harness/

$ gnatfuzz fuzz -P generated_test_harness/fuzz_testing/fuzz_test.gpr

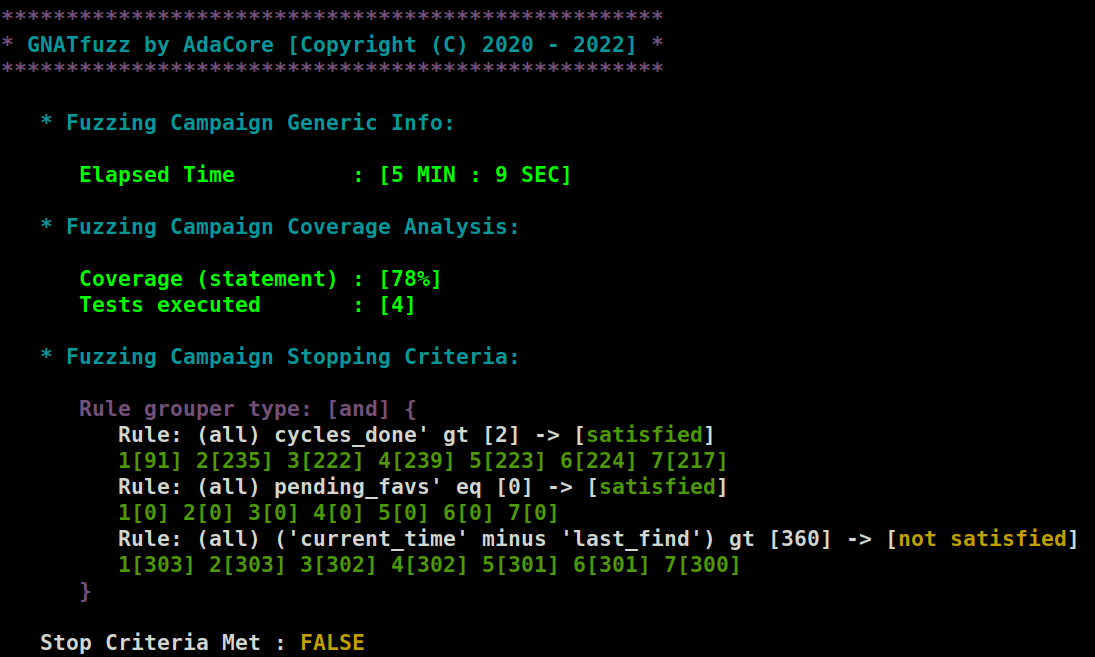

When we allow the fuzzing session to run for a while, we see that the coverage reaches 78% and doesn’t increase any further:

Fig. 4.6 Coverage can’t reach 100% due to not fuzzing the global and private state that affect the subprogram’s execution.¶

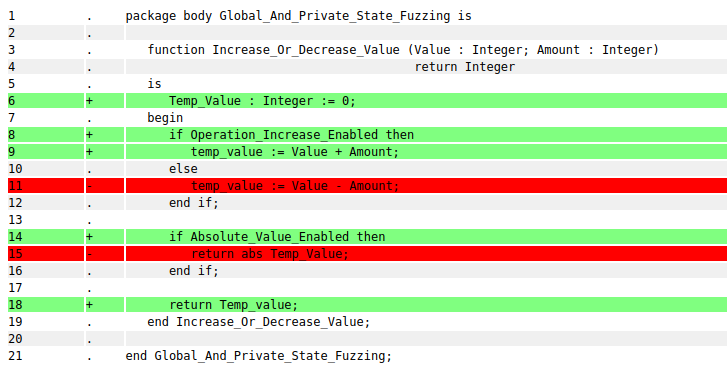

We can also examine the coverage report for the source code of the subprogram under test:

$ firefox generated_test_harness/fuzz_testing/session/coverage_output/global_fuzzing.adb.html

Because the GNATfuzz autogenerated test-harness doesn’t change the

Operation_Increase_Enabled global or the Absolute_Value_Enabled

private variable, we can see from the coverage report in Fig. 4.7 that the code at line 11 and line 15 is

never reached.

Fig. 4.7 Coverage report for the Increase_Or_Decrease_Value subprogram¶

We can add the following child package to the global_and_private_state

project to enable the fuzzing of the Operation_Increase_Enabled global

and the Absolute_Value_Enabled private variable:

package Global_And_Private_State_Fuzzing.Child is

   function Fuzz_Global_And_Private_State
     (Value : Integer; Amount : Integer; Oper_Increase_Enabled : Boolean;
      Absolute_Val_Enabled : Boolean) return Integer;
   -- Wrapper function for the "Increase_Or_Decrease_Value" function
   -- that takes two extra arguments which will allow the fuzzing of
   -- the global and private variables that affect the execution of
   -- the wrapped function.

end Global_And_Private_State_Fuzzing.Child;

package body Global_And_Private_State_Fuzzing.Child is

   function Fuzz_Global_And_Private_State
     (Value : Integer; Amount : Integer; Oper_Increase_Enabled : Boolean;
      Absolute_Val_Enabled : Boolean) return Integer
   is
   begin

      Operation_Increase_Enabled := Oper_Increase_Enabled;
      Absolute_Value_Enabled := Absolute_Val_Enabled;

      return Increase_Or_Decrease_Value (Value, Amount);

   end Fuzz_Global_And_Private_State;

end Global_And_Private_State_Fuzzing.Child;

This child package wraps the function we want to fuzz test in a wrapper function, Fuzz_Global_And_Private_State. Creating a child of the package containing the subprogram we want to fuzz has two benefits: the child can access the global and private state of the parent, and we don’t need to modify our original code. The wrapper has the same parameters as the wrapped function, plus two extra: one represents the global variable and the other the private variable that affect the wrapped function’s execution. As we can see in Source Code 4.26 on lines 9 and 10, these extra parameters are assigned to the Operation_Increase_Enabled global variable and the Absolute_Value_Enabled private variable, respectively, and thus drive their values during fuzz testing of Fuzz_Global_And_Private_State. The wrapped function, Increase_Or_Decrease_Value, is called at line 12. This setup allows fuzz testing of Increase_Or_Decrease_Value as before, but now also drives the global and private state that affects its execution.
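The effect of the wrapper on reachable code can be reproduced with a small model. The Python sketch below is not the Ada example itself: it is a hypothetical model of Increase_Or_Decrease_Value that records which branches run, with a class standing in for the package state. Integer overflow checks, which Ada would perform, are not modeled.

```python
from itertools import product


class State:
    """Stands in for the package's global and private variables."""
    operation_increase_enabled = True
    absolute_value_enabled = False


def increase_or_decrease_value(value: int, amount: int, hit: set) -> int:
    """Model of the function under test; 'hit' records the branches taken."""
    if State.operation_increase_enabled:
        hit.add("increase")
        temp = value + amount
    else:
        hit.add("decrease")
        temp = value - amount
    if State.absolute_value_enabled:
        hit.add("absolute")
        return abs(temp)
    hit.add("plain")
    return temp


def wrapper(value: int, amount: int, increase: bool, absolute: bool, hit: set) -> int:
    """Model of the wrapper: set the state from parameters, then call."""
    State.operation_increase_enabled = increase
    State.absolute_value_enabled = absolute
    return increase_or_decrease_value(value, amount, hit)


# Calling the function directly: only the default state is ever exercised.
direct_hits = set()
for value, amount in [(1, 2), (-5, 3), (0, 0)]:
    increase_or_decrease_value(value, amount, direct_hits)

# Calling through the wrapper: the two flags become inputs too.
wrapper_hits = set()
for increase, absolute in product([True, False], repeat=2):
    wrapper(-5, 3, increase, absolute, wrapper_hits)

print(sorted(direct_hits))
print(sorted(wrapper_hits))
```

However many values the direct calls try, they stay on the two default branches, while the wrapper reaches all four.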

Let’s now try to fuzz the newly created wrapper function:

$ gnatfuzz analyze -P global_and_private_state.gpr

$ gnatfuzz generate -P global_and_private_state.gpr -S src/global_and_private_state_fuzzing-child.ads -L 3 -o generated_test_harness_2/

$ gnatfuzz fuzz -P generated_test_harness_2/fuzz_testing/fuzz_test.gpr

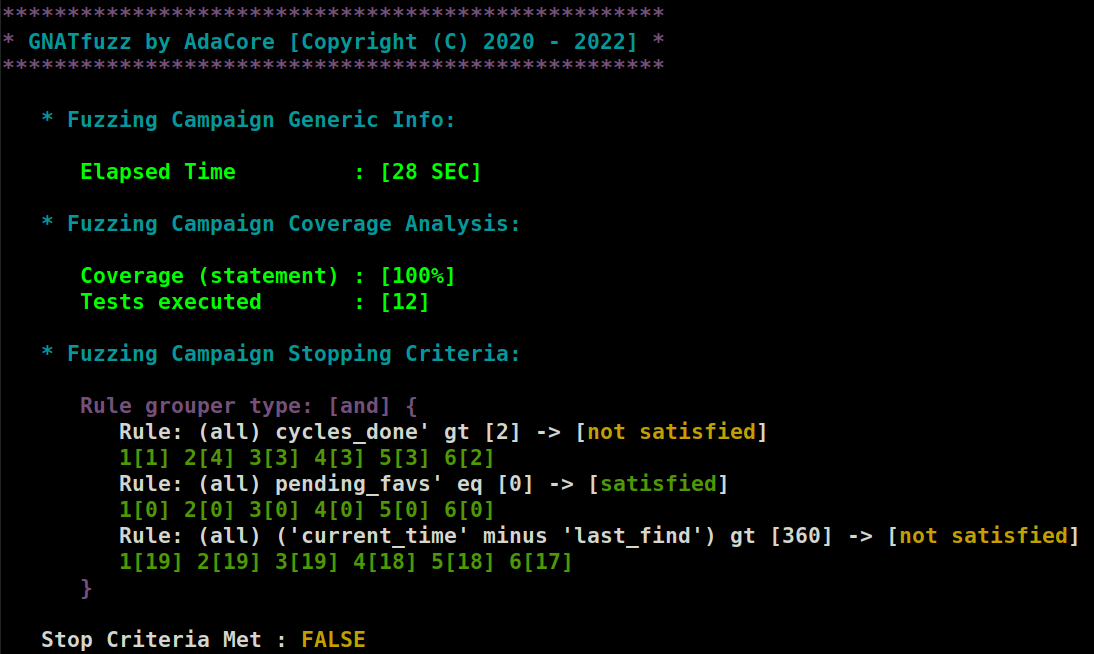

Fig. 4.8 Coverage reaches 100% when global and private variables that affect the execution of the subprogram under test are being fuzzed¶

We see that the coverage indeed reached 100% in a short time. By inspecting

the coverage report generated by GNATcoverage on the source code of the

Increase_Or_Decrease_Value subprogram, we can see that GNATfuzz now

executes all source-code lines of the Increase_Or_Decrease_Value

subprogram:

$ firefox generated_test_harness_2/fuzz_testing/session/coverage_output/global_fuzzing.adb.html

Fig. 4.9 Coverage report for the Increase_Or_Decrease_Value subprogram when fuzzing the private and global variables that affect the subprogram’s execution¶

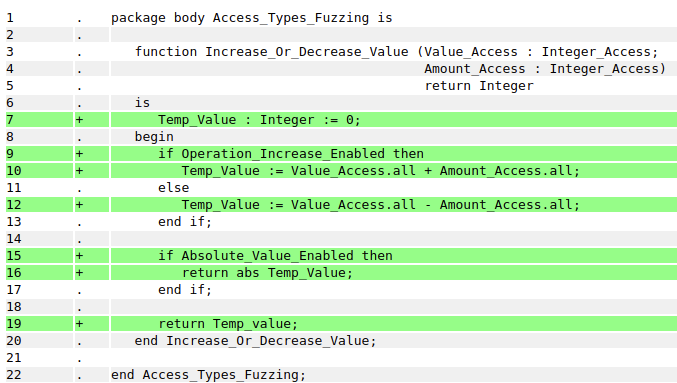

4.3.7. Fuzzing Access Types¶

Currently, GNATfuzz doesn’t support the auto-generation of a fuzz-test harness when a subprogram takes an argument of an Access Type of any kind (see Section 4.3.2). Fortunately, we can use the approach used to fuzz-test global and private state (see Section 4.3.6) to fuzz test some common forms of Access Types. More specifically, we can do this when the type of the object that the Access Type designates is fuzzable (see Section 4.3.2). We’ll use a modified version of the example in Section 4.3.6, namely the access_types_parameters example, where we update the parameters of the Increase_Or_Decrease_Value function to be Integer access types:

package Access_Types_Fuzzing is

   Operation_Increase_Enabled : Boolean := True;

   type Integer_Access is access Integer;

   function Increase_Or_Decrease_Value
     (Value_Access : Integer_Access; Amount_Access : Integer_Access)
      return Integer;
   -- Based on the "Operation_Increase_Enabled" this function will
   -- either increase or decrease the given "Value" by the given "Amount",
   -- and based on the "Absolute_Value_Enabled", it will return either the
   -- absolute value or not of the selected operation's result

private
   Absolute_Value_Enabled : Boolean := False;
end Access_Types_Fuzzing;

package body Access_Types_Fuzzing is

   function Increase_Or_Decrease_Value (Value_Access : Integer_Access;
                                        Amount_Access : Integer_Access)
      return Integer
   is
      Temp_Value : Integer := 0;
   begin
      if Operation_Increase_Enabled then
         Temp_Value := Value_Access.all + Amount_Access.all;
      else
         Temp_Value := Value_Access.all - Amount_Access.all;
      end if;

      if Absolute_Value_Enabled then
         return abs Temp_Value;
      end if;

      return Temp_Value;
   end Increase_Or_Decrease_Value;

end Access_Types_Fuzzing;

By providing a wrapper function to the Increase_Or_Decrease_Value

function, we enable the auto-generation of a test-harness that allows

fuzz-testing of the wrapped function. This is done similarly to the

approach used for fuzz-testing global and private variables in

Section 4.3.6:

package Access_Types_Fuzzing.Child is

   function Fuzz_Access_Type_Parameters
     (Value : Integer; Amount : Integer; Oper_Increase_Enabled : Boolean;
      Absolute_Val_Enabled : Boolean) return Integer;
   -- Wrapper function for the "Increase_Or_Decrease_Value" function
   -- that takes two extra arguments which will allow the fuzzing of
   -- the global and private variables that affect the execution of
   -- the wrapped function.

end Access_Types_Fuzzing.Child;

package body Access_Types_Fuzzing.Child is

   function Fuzz_Access_Type_Parameters
     (Value : Integer; Amount : Integer; Oper_Increase_Enabled : Boolean;
      Absolute_Val_Enabled : Boolean) return Integer
   is
      Value_Access : constant Integer_Access := new Integer'(Value);
      Amount_Access : constant Integer_Access := new Integer'(Amount);
   begin
      Operation_Increase_Enabled := Oper_Increase_Enabled;
      Absolute_Value_Enabled := Absolute_Val_Enabled;

      return Increase_Or_Decrease_Value (Value_Access, Amount_Access);
   end Fuzz_Access_Type_Parameters;

end Access_Types_Fuzzing.Child;

Now, we can proceed with the standard GNATfuzz modes for fuzz-testing the

Fuzz_Access_Type_Parameters wrapper function:

$ gnatfuzz analyze -P access_type_parameter.gpr

$ gnatfuzz generate -P access_type_parameter.gpr -S src/access_types_fuzzing-child.ads -L 3 -o generated_test_harness/

$ gnatfuzz fuzz -P generated_test_harness/fuzz_testing/fuzz_test.gpr

Looking at the coverage report generated, we can confirm that the function

Increase_Or_Decrease_Value was successfully fuzzed:

Fig. 4.10 Coverage report for the Increase_Or_Decrease_Value subprogram when fuzzing the private, global variables, and Access Type parameters that affect the subprogram’s execution¶

4.3.8. Filtering exceptions¶

A generated GNATfuzz fuzz-test harness captures all exceptions unhandled by

the system under test and adds the test cases that caused these exceptions

to the crashes directory. In some cases, you might want to filter some

known and expected exceptions (such as an exception raised by design to

notify the calling routine of an error when a library’s API is misused) to

stop them from being captured as software bugs. The GNATfuzz-generated

test-harness provides an easy mechanism to do this.

Warning

You should be careful if you decide to filter out Ada run-time exceptions. Such exceptions, if unhandled, probably indicate software bugs or potential security vulnerabilities.

Let’s look at the filtering_exceptions example that demonstrates how to

tell GNATfuzz not to add a crash to the crashes directory when a specific

exception is raised. The source code of the example is:

package Procedure_Under_Test is

   Some_Expected_Exception : exception;

   procedure Test (Some_Text : String);

end Procedure_Under_Test;

with Ada.Text_IO; use Ada.Text_IO;

package body Procedure_Under_Test is

   procedure Test (Some_Text : String) is
   begin
      Put_Line (Some_Text);
      raise Some_Expected_Exception;
   end Test;

end Procedure_Under_Test;

As shown in Source Code 4.32, the procedure Test raises an exception at line 8. Let’s generate a test harness for this procedure and use it to filter out this exception:

$ gnatfuzz analyze -P filtering_exceptions.gpr

$ gnatfuzz generate -P filtering_exceptions.gpr -S src/procedure_under_test.ads -L 5 -o generated_test_harness

In the generated_test_harness/fuzz_testing/user_configuration/ directory of the autogenerated GNATfuzz test harness, there’s a file called procedure_under_test-gnatfuzz_user_configuration.adb that contains the following procedure:

procedure Execute_Subprogram_Under_Test
  (Some_Text : Standard.String)
is
begin

   --  Call the subprogram under test. The user is not expected to change
   --  this code.

   Procedure_Under_Test.Test
     (Some_Text => Some_Text);

   --  Filter out any expected exception types here.
   --
   --  Ada run-time exceptions should not be filtered because they probably
   --  indicate software bugs and potential security vulnerabilities if
   --  captured. In such a case, GNATfuzz will place the test case
   --  responsible for raising the exception under the "crashes" directory.
   --  If an exception is expected, then an exception handler can be added
   --  here to capture it and allow GNATfuzz to ignore it.
   --  For example,
   --
   --  exception
   --     when Occurrence : Some_Expected_Exception =>
   --        null;

end Execute_Subprogram_Under_Test;

We can modify this in the following manner:

procedure Execute_Subprogram_Under_Test
  (Some_Text : Standard.String)
is
begin

   --  Call the subprogram under test. The user is not expected to change
   --  this code.

   Procedure_Under_Test.Test
     (Some_Text => Some_Text);

exception
   when Occurrence : Procedure_Under_Test.Some_Expected_Exception =>
      null;
end Execute_Subprogram_Under_Test;

This modification catches the exception before GNATfuzz can record it as a crash, so the test case that raised it won’t be added to the crashes directory. We can confirm this with a fuzz-testing session that uses the following custom stop criteria:

<GNATfuzz>
  <afl_stop_criteria>
    <rule_grouper type="and">
      <rule>
        <state>coverage</state>
        <operator>eq</operator>
        <value>100</value>
        <all_sessions>true</all_sessions>
      </rule>
      <rule>
        <state>saved_crashes</state>
        <operator>eq</operator>
        <value>0</value>
        <all_sessions>true</all_sessions>
      </rule>
      <rule>
        <state>run_time</state>
        <operator>gt</operator>
        <value>60</value>
        <all_sessions>true</all_sessions>
      </rule>
    </rule_grouper>
  </afl_stop_criteria>
</GNATfuzz>

$ gnatfuzz fuzz -P generated_test_harness/fuzz_testing/fuzz_test.gpr --cores=1 --stop-criteria=stop_criteria.xml

This fuzz-testing session stops only when all three rules hold: 100% coverage of the subprogram under test has been achieved, no crashes have been saved, and the session has run for more than 60 seconds. Full coverage means that line 8 in Source Code 4.32 has been executed, yet the raised exception hasn’t caused the associated test case to be added to the crashes directory. The one-minute minimum run time gives GNATfuzz sufficient time to place any crashing test cases in the crashes directory. Our fuzz-testing session exits on these stop criteria, which confirms that the raised exception was successfully filtered out.
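The same handler can list several expected exceptions while leaving run-time exceptions untouched. The following stand-alone program is a minimal sketch of that behavior outside of any GNATfuzz harness. The names Filter_Demo, Invalid_Input, Not_Supported, Under_Test, and Execute are hypothetical and exist only for this illustration; Execute merely mirrors the shape of Execute_Subprogram_Under_Test.

```ada
with Ada.Text_IO; use Ada.Text_IO;

procedure Filter_Demo is

   --  Hypothetical exceptions standing in for a library's documented,
   --  by-design errors.
   Invalid_Input : exception;
   Not_Supported : exception;

   --  Hypothetical subprogram under test: raises an expected exception for
   --  some inputs and fails a run-time check (division by zero) for another.
   procedure Under_Test (Value : Integer) is
      Divisor : constant Integer := Value - 3;
   begin
      if Value = 1 then
         raise Invalid_Input;
      elsif Value = 2 then
         raise Not_Supported;
      end if;
      Put_Line ("Result:" & Integer'Image (100 / Divisor));
   end Under_Test;

   --  Mirrors the shape of Execute_Subprogram_Under_Test: only the expected
   --  exceptions are handled; anything else propagates to the caller.
   procedure Execute (Value : Integer) is
   begin
      Under_Test (Value);
   exception
      when Invalid_Input | Not_Supported =>
         null;
   end Execute;

begin
   Execute (1);  --  Invalid_Input is filtered
   Execute (2);  --  Not_Supported is filtered
   Execute (5);  --  no exception is raised
   begin
      Execute (3);  --  division by zero is not filtered
   exception
      when Constraint_Error =>
         Put_Line ("Constraint_Error propagated");
   end;
end Filter_Demo;
```

Because the handler names only the two expected exceptions, the Constraint_Error raised by the division still reaches the caller. In a generated harness, that is the situation in which GNATfuzz would record the test case as a crash.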

4.3.9. Fuzzing C functions through Ada bindings¶

Currently, GNATfuzz fuzz-testing automation is not supported for C programs. However, it’s possible to fuzz-test C functions through Ada bindings.

Let’s look at the testing_C_code_through_Ada_bindings example, which demonstrates how GNATfuzz can fuzz-test a C function through an Ada binding and detect a bug in the C code. The C source code of the example is:

#include "calculations.h"

// simple function performing some basic math operations
// which allows a division by 0
int calculations_problematic (int a, int b)
{
  int temp = 0;
  temp = a * b;
  temp = temp + a / b;
  return temp;
}

// same as previous function but prohibits the division
// by zero
int calculations (int a, int b)
{
  int temp = 0;
  temp = a * b;
  if (b != 0)
    temp = temp + a / b;
  return temp;
}

The two functions perform the same simple calculation, but the calculations_problematic function allows a division by zero. We want to verify that GNATfuzz is able to detect this bug by fuzz-testing the Ada binding for this function.

The Ada bindings for the two C functions are:

package Procedure_Under_Test is

   function Calculations (A : Integer; B : Integer) return Integer
     with Import        => True,
          Convention    => C,
          External_Name => "calculations";

   function Calculations_Problematic (A : Integer; B : Integer) return Integer
     with Import        => True,
          Convention    => C,
          External_Name => "calculations_problematic";

   procedure Test
     (Control : Integer;
      In_A    : Integer;
      In_B    : Integer);

end Procedure_Under_Test;

A possible driver procedure to test the two C functions could be:

with Ada.Text_IO; use Ada.Text_IO;

package body Procedure_Under_Test is

   procedure Test
     (Control : Integer;
      In_A    : Integer;
      In_B    : Integer)
   is
      Result : Integer := 0;
   begin

      if Control < 0 then
         Result := Calculations_Problematic (In_A, In_B);
      else
         Result := Calculations (In_A, In_B);
      end if;

      Put_Line (Result'Image);

   end Test;

end Procedure_Under_Test;

Note

By default, gprbuild compiles only Ada source files. To compile C source files as well, we use the Languages attribute in the GPR file and add c to the list of languages: for Languages use ("ada", "c");.

By running the GNATfuzz analyze mode:

$ gnatfuzz analyze -P testing_C_code.gpr

GNATfuzz generates the following analysis file:

{
  "user_project": "<install_prefix>/share/examples/testing_C_code_through_Ada_bindings/testing_C_code.gpr",
  "scenario_variables": [],
  "fuzzable_subprograms": [
    {
      "source_filename": "<install_prefix>/share/examples/testing_C_code_through_Ada_bindings/src/procedure_under_test.ads",
      "start_line": 3,
      "id": 1,
      "corpus_gen_supported": true
    },
    {
      "source_filename": "<install_prefix>/share/examples/testing_C_code_through_Ada_bindings/src/procedure_under_test.ads",
      "start_line": 8,
      "id": 2,
      "corpus_gen_supported": true
    },
    {
      "source_filename": "<install_prefix>/share/examples/testing_C_code_through_Ada_bindings/src/procedure_under_test.ads",
      "start_line": 13,
      "id": 3,
      "corpus_gen_supported": true
    }
  ]
}

Note

As we can see, GNATfuzz can also target the Ada bindings for the C functions directly. For this example, we use the Test procedure as a driver.

We can now use the generate and fuzz modes to fuzz-test our driver procedure:

$ gnatfuzz generate -P testing_C_code.gpr --analysis obj/gnatfuzz/analyze.json --subprogram-id 3 -o generated_test_harness

$ gnatfuzz fuzz -P generated_test_harness/fuzz_testing/fuzz_test.gpr --cores=1 --stop-criteria=stop_criteria.xml

The following stop criteria allow the fuzz-testing session to exit only when full coverage of the driver procedure is achieved (implying that both C bindings have been called) and at least one crash has been saved (which should be the divide-by-zero allowed by the calculations_problematic C function):

<GNATfuzz>
  <afl_stop_criteria>
    <rule_grouper type="and">
      <rule>
        <state>coverage</state>
        <operator>eq</operator>
        <value>100</value>
        <all_sessions>true</all_sessions>
      </rule>
      <rule>
        <state>saved_crashes</state>
        <operator>gt</operator>
        <value>0</value>
        <all_sessions>true</all_sessions>
      </rule>
    </rule_grouper>
  </afl_stop_criteria>
</GNATfuzz>

By examining the crashes directory, we can see that GNATfuzz was able to detect the expected divide-by-zero bug:

$ cd generated_test_harness/fuzz_testing/session/fuzzer_output/gnatfuzz_1_master/

$ ls crashes/

id:000000,sig:06,src:000000,time:1212,execs:4167,op:havoc,rep:16 README.txt

$ ../../../build/decode_test crashes/id\:000000\,sig\:06\,src\:000000\,time\:1212\,execs\:4167\,op\:havoc\,rep\:16

Parameter: Param Control [Integer] = -1

Parameter: Param In_A [Integer] = 721151

Parameter: Param In_B [Integer] = 0

As we can see, GNATfuzz detected one crashing test case (note that the name of the test-case file can vary). Decoding it shows that the divisor (In_B, passed as argument B to Calculations_Problematic) is zero, while the negative value of the Control argument selects the call to the problematic function. We can now use this test case to debug and correct the C function. Note that arithmetic overflows are also allowed by both C functions, so other crashes may be detected before the division-by-zero crash.
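The bindings in this example declare their parameters as Integer. The standard Interfaces.C package also provides the type int, which is defined to correspond to the C type of the same name. The following stand-alone sketch shows a binding written with that type, wrapped in an Ada function that takes Integer. It binds to abs from the C standard library only because that function is available at link time without extra C sources; the names Binding_Demo, C_Abs, and Magnitude are hypothetical and not part of the example project.

```ada
with Ada.Text_IO;  use Ada.Text_IO;
with Interfaces.C; use Interfaces.C;

procedure Binding_Demo is

   --  Binding to the C standard library function "int abs (int)".
   --  Interfaces.C.int is the Ada type that corresponds to C's int.
   function C_Abs (Value : int) return int
     with Import        => True,
          Convention    => C,
          External_Name => "abs";

   --  Thin Ada wrapper that converts between Integer and the C type, so
   --  that callers only deal with Ada types.
   function Magnitude (Value : Integer) return Integer is
   begin
      return Integer (C_Abs (int (Value)));
   end Magnitude;

begin
   Put_Line (Integer'Image (Magnitude (-42)));
end Binding_Demo;
```

A wrapper of this kind plays the same role as the Test driver above: it gives the fuzzer an Ada subprogram with Ada parameter types to target, while the imported function stays an implementation detail.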

4.3.10. Resetting dynamically allocated memory when fuzz-testing with the AFL++ persistent mode¶

In Section 4.3.1.4.2, we explained that persistent mode is only suitable for programs whose state can be completely reset between test executions.

Let’s now look at the afl_persist_reset_mem example, which demonstrates how to reset the dynamically allocated memory of the program under test when using the persistent execution mode:

persistent mode (test_afl_persist.ads)¶

package Test_AFL_Persist is

   procedure Initialize (Width : Integer; Length : Integer);

private

   type Floor_Plan_Type is record
      Width  : Integer;
      Length : Integer;
      Ratio  : Integer;
   end record;

   type Floor_Plan_Access is access all Floor_Plan_Type;

   Floor_Plan : Floor_Plan_Access;

end Test_AFL_Persist;